What are contextual bandits? The AI behind smarter, real-time personalization

Published on December 11, 2025/Last edited on December 11, 2025/7 min read

Team Braze


Marketers have always looked to testing to guide smarter decisions. Multi-armed bandits improved on static A/B tests by balancing exploration and exploitation—learning which version performs best while campaigns run. But they treat every customer the same, searching for a single winning option.

Contextual bandits take that logic further. They add context, such as behavior, location, device, or past engagement, and use predictive decisioning to determine the “next best everything” for each individual. Learning doesn't stop once a winner is found: it continues in real time, adapting to every interaction and nudging each customer toward the next step.

By connecting experimentation with AI personalization, contextual bandits help brands turn every customer touchpoint into a learning opportunity.

What is a contextual bandit?

A contextual bandit is an AI decisioning model rooted in reinforcement learning: a simplified form of it in which each decision is judged by its immediate outcome, such as an open, click, or purchase. It connects customer context to marketing action, learning which message, offer, or channel will perform best for each individual by analyzing real-time data (such as behavior, device, or timing) and updating its decisions as new information comes in. This creates a dynamic system that personalizes engagement in real time and replaces manual testing with continuous learning.
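To make that loop concrete, here is a minimal Python sketch of the observe-context, choose, measure, update cycle. It assumes a simple epsilon-greedy policy and a small set of discrete contexts (such as a device label); the class name and parameters are illustrative only, not how BrazeAI Decisioning Studio™ is implemented, and production systems usually rely on richer features and models.

```python
import random
from collections import defaultdict


class EpsilonGreedyContextualBandit:
    """Minimal contextual bandit: one reward estimate per (context, action) pair.

    Hypothetical sketch. Contexts are assumed to be small hashable values such
    as "mobile" or ("mobile", "evening").
    """

    def __init__(self, actions, epsilon=0.1, seed=None):
        self.actions = list(actions)
        self.epsilon = epsilon            # share of decisions spent exploring
        self.rng = random.Random(seed)
        self.counts = defaultdict(int)    # (context, action) -> times chosen
        self.values = defaultdict(float)  # (context, action) -> mean reward

    def choose(self, context):
        # Explore: occasionally try a random action so estimates keep improving.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)
        # Exploit: pick the action with the best estimate for this context.
        return max(self.actions, key=lambda a: self.values[(context, a)])

    def update(self, context, action, reward):
        # Fold the observed outcome into the running mean for this pair.
        key = (context, action)
        self.counts[key] += 1
        self.values[key] += (reward - self.values[key]) / self.counts[key]
```

Calling `choose()` before each send and `update()` once the outcome is known (1.0 for a conversion, 0.0 otherwise) is the whole feedback loop; `epsilon` controls how often the model explores instead of exploiting what it already knows.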

Contextual bandits vs. multi-armed bandits: what’s the difference?

Both approaches balance learning and performance, but they make decisions in different ways. A multi-armed bandit learns which overall option performs best, like finding one winning campaign message, by gradually sending more traffic to the top performer. It wastes fewer impressions on weak variants than a fixed-split A/B test, but it still converges on one “best” version for everyone.
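For comparison, a multi-armed bandit keeps one estimate per variant and ignores who is receiving it. The sketch below uses Thompson sampling with a Beta prior, one common way to shift traffic toward the top performer (epsilon-greedy and UCB are others). The conversion rates passed in are hypothetical and only simulate customer responses; the bandit never sees them directly.

```python
import random


def thompson_sampling(true_rates, rounds, seed=0):
    """Simulate a Beta-Bernoulli Thompson sampling bandit over fixed variants.

    Returns how many sends each variant received.
    """
    rng = random.Random(seed)
    successes = [0] * len(true_rates)
    failures = [0] * len(true_rates)
    sends = [0] * len(true_rates)
    for _ in range(rounds):
        # Draw a plausible rate for each variant from its Beta(1 + s, 1 + f)
        # posterior and send the variant whose draw is highest.
        draws = [rng.betavariate(1 + s, 1 + f) for s, f in zip(successes, failures)]
        arm = max(range(len(draws)), key=draws.__getitem__)
        sends[arm] += 1
        # Simulate the customer's response and record it for that variant.
        if rng.random() < true_rates[arm]:
            successes[arm] += 1
        else:
            failures[arm] += 1
    return sends
```

With hypothetical rates of 5%, 10%, and 20% over 5,000 simulated sends, the large majority of traffic typically flows to the 20% variant, but every customer still gets that same variant.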

A contextual bandit goes further. It considers each customer’s unique situation before deciding what to show, using real-time data such as device, time of day, or past interactions. Instead of identifying one winner, it continuously learns which message, offer, or channel performs best for each person.
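A toy calculation shows why that matters. With the hypothetical results below, pooling outcomes across all customers (the multi-armed view) crowns push as the single winner, even though email converts far better for desktop users. Keeping estimates per context surfaces both winners. This is a summary over logged results, not a full bandit policy.

```python
from collections import defaultdict

# Hypothetical results: (context, message) -> (conversions, sends)
RESULTS = {
    ("mobile", "push"): (6, 10),
    ("mobile", "email"): (2, 10),
    ("desktop", "push"): (1, 4),
    ("desktop", "email"): (3, 4),
}


def pooled_winner(results):
    """Multi-armed view: ignore context and pool outcomes per message."""
    conv = defaultdict(int)
    sends = defaultdict(int)
    for (_, message), (c, n) in results.items():
        conv[message] += c
        sends[message] += n
    return max(conv, key=lambda m: conv[m] / sends[m])


def per_context_winners(results):
    """Contextual view: keep a separate best message for each context."""
    best = {}
    for (context, message), (c, n) in results.items():
        rate = c / n
        if context not in best or rate > best[context][1]:
            best[context] = (message, rate)
    return {context: message for context, (message, _) in best.items()}


print(pooled_winner(RESULTS))        # push
print(per_context_winners(RESULTS))  # {'mobile': 'push', 'desktop': 'email'}
```

Pooled, push converts 7 of 14 and email 5 of 14, so push wins overall; yet desktop users who receive it convert at only 25%, against 75% for email.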

Key differences in data input and learning speed

Multi-armed bandits pool outcomes into one running estimate per variant, regardless of who received it, so their insights describe the audience as a whole. Contextual bandits also record the live context signals attached to each interaction. Every new data point refines future decisions for similar customers, shortening the learning cycle and reducing the lag between insight and action.
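The “every new data point” part usually comes down to an online update: the estimate is adjusted as each event arrives instead of being recomputed from the full history. A minimal sketch, assuming a binary reward (1 for engaged, 0 otherwise); the class name is illustrative.

```python
class RunningRate:
    """Online estimate of an engagement rate, updated one event at a time."""

    def __init__(self):
        self.n = 0        # events seen so far
        self.mean = 0.0   # current estimate

    def update(self, reward):
        # Incremental mean: mean_n = mean_{n-1} + (reward - mean_{n-1}) / n
        self.n += 1
        self.mean += (reward - self.mean) / self.n
        return self.mean
```

After each event, `mean` equals the average of every reward seen so far, but each update costs constant time and needs no stored history.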

Why context makes marketing applications more accurate

Adding context sharpens predictions. Rather than generalizing results across audiences, contextual bandits analyze the variables that influence engagement, such as location, recency, preference, and behavior. This granularity lets marketers tailor communications precisely, matching messages to moments rather than just segments.
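Before a model can use these variables, they have to be turned into numbers. The sketch below encodes location, recency, preference, and behavior into a single feature vector; the category lists, scaling choices, and function names are all hypothetical.

```python
DEVICES = ["ios", "android", "web"]        # hypothetical device categories
PREFERENCES = ["sports", "news", "deals"]  # hypothetical content preferences


def one_hot(value, categories):
    # 1.0 in the slot matching `value`; all zeros for an unseen value
    return [1.0 if value == c else 0.0 for c in categories]


def encode_context(device, days_since_last_visit, preference,
                   sessions_last_7d, in_home_market):
    """Turn raw customer signals into a numeric feature vector."""
    recency = 1.0 / (1.0 + days_since_last_visit)   # 1.0 today, decays toward 0
    engagement = min(sessions_last_7d / 7.0, 1.0)   # capped at one session a day
    location = 1.0 if in_home_market else 0.0
    return (
        one_hot(device, DEVICES)
        + [recency]
        + one_hot(preference, PREFERENCES)
        + [engagement, location]
    )
```

For example, `encode_context("android", 3, "deals", 14, True)` returns `[0.0, 1.0, 0.0, 0.25, 0.0, 0.0, 1.0, 1.0, 1.0]`. One-hot encoding keeps unordered categories from implying a false ranking, and scaling numeric signals into [0, 1] keeps any single feature from dominating a linear model.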

Why contextual bandits matter for modern marketing

Contextual bandits, a practical form of reinforcement learning, take marketing from static experiments to adaptive optimization systems that learn continuously. They optimize campaigns in real time, responding to changing behavior and market conditions as they happen.

Moving from testing to continuous learning

Traditional testing delivers insights after the fact. Contextual bandits learn as they go, allowing marketers to act on new data immediately. Each interaction feeds into the next decision, creating an ongoing loop of learning that sharpens performance with every campaign.

Real-time adaptation to user behavior

Customer intent fluctuates with time, channel, and context. A contextual bandit monitors those changes and adjusts automatically. For instance, if a customer starts engaging more with mobile push than email, the model shifts toward push in subsequent interactions, keeping communication aligned with evolving preferences.
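One standard way to let an estimate follow drifting preferences is a constant step size, which weights recent outcomes more heavily than old ones (an exponential recency-weighted average). This is a swapped-in illustration, not necessarily what any particular product does; the step size and starting prior are assumptions.

```python
def recency_weighted_estimates(events, channels, step_size=0.2, prior=0.5):
    """Track each channel's engagement with a recency-weighted average.

    events is a sequence of (channel, engaged) pairs with engaged in {0, 1}.
    A constant step size makes each older outcome count for less, so the
    estimates follow a customer whose preference drifts.
    """
    estimates = {ch: prior for ch in channels}
    for channel, engaged in events:
        estimates[channel] += step_size * (engaged - estimates[channel])
    return estimates
```

After five emails that engaged and five pushes that didn't, followed by three rounds where only push engages, these estimates favor push (about 0.57 vs 0.43), while a plain average would still favor email (5 of 8 vs 3 of 8).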

Reducing wasted impressions and increasing conversions

By continuously redirecting budget and attention toward higher-performing variants, contextual bandits cut down on underperforming sends and impressions. Campaigns reach the right audience with relevant content faster, helping to improve both efficiency and conversion rates.
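The savings can be framed as regret: the conversions lost compared with always sending the best variant. A back-of-the-envelope sketch with hypothetical numbers (two variants converting at 2% and 4%, 10,000 sends) compares an even A/B split with an allocation that has shifted 90% of traffic to the stronger variant.

```python
def expected_conversions(allocation, rates, sends):
    """Expected conversions when `sends` impressions are split by `allocation`."""
    return sum(share * rate * sends for share, rate in zip(allocation, rates))


def regret(allocation, rates, sends):
    """Conversions lost versus sending every impression to the best variant."""
    return max(rates) * sends - expected_conversions(allocation, rates, sends)


rates = [0.02, 0.04]  # hypothetical conversion rates
for label, allocation in [("even split", [0.5, 0.5]), ("90/10", [0.1, 0.9])]:
    print(label,
          round(expected_conversions(allocation, rates, 10_000), 2),
          round(regret(allocation, rates, 10_000), 2))
```

The even split expects 300 conversions (100 short of the 400 possible), while the 90/10 allocation expects 380 (20 short). A real bandit reaches its allocation gradually, so its actual regret depends on how fast it learns.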

For marketers, this creates a measurable advantage—fewer delays between insight and impact, and more opportunities to connect with customers in the moments that matter.

Contextual bandit models in Braze rely on a foundation designed with robust security and privacy features, as well as model guardrails, enabling customers to leverage their data responsibly.

Real-world marketing applications of contextual bandits

Contextual bandits translate machine learning theory into everyday marketing action. They continuously learn from live interactions, deciding which message, channel, or creative variation will resonate best for each individual customer in real time. Here’s how brands can apply this adaptive approach across campaigns.

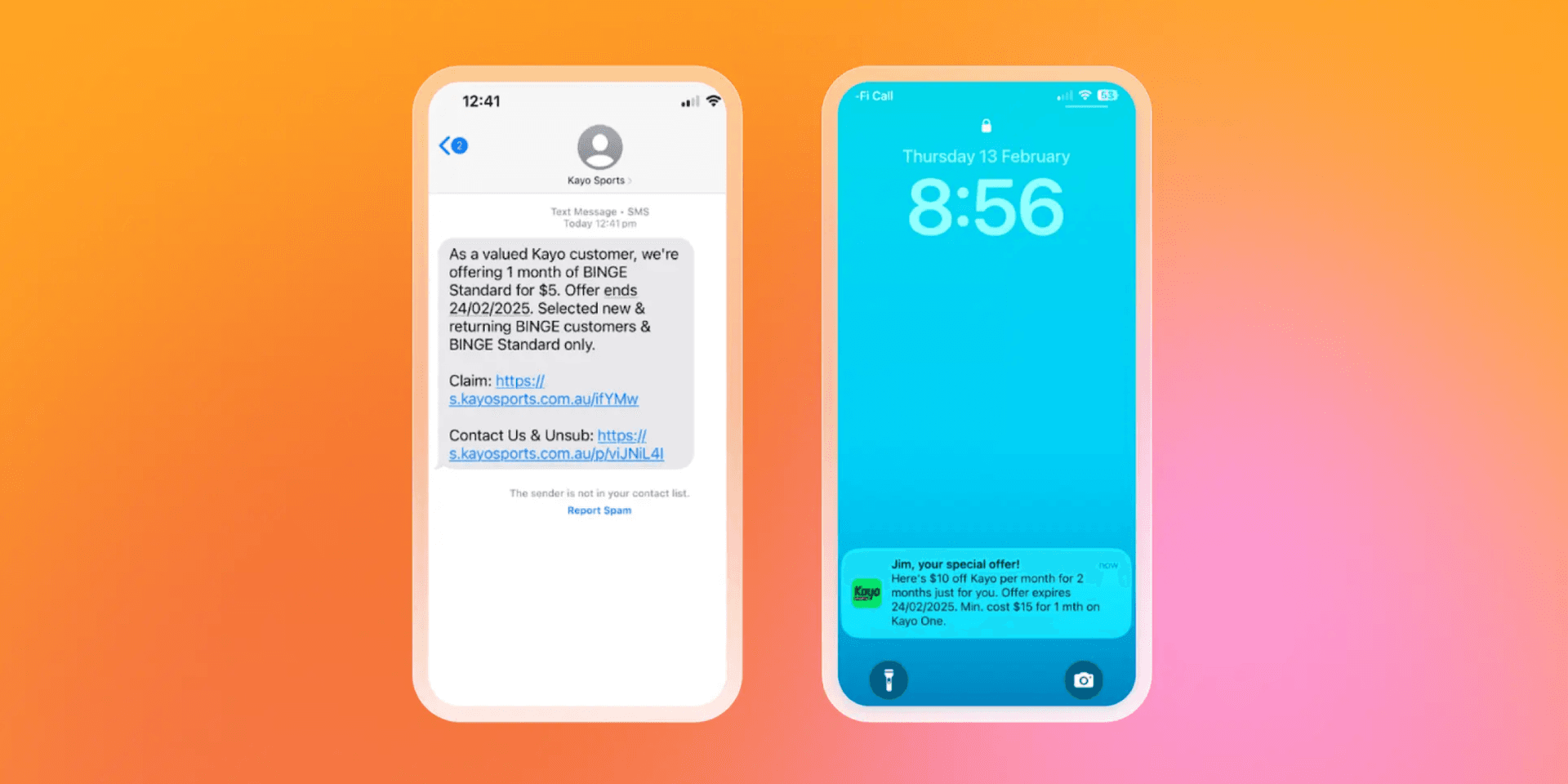

Personalizing message timing and channel

Customers engage on their own schedules and preferred channels—and those preferences shift constantly. A contextual bandit model learns when and where each person is most likely to respond. Over time, it discovers patterns like one customer opening push notifications on weekday mornings and another engaging more through email in the evenings.

Example: A retail brand uses contextual bandit decisioning to personalize its daily deal alerts. The model tracks each shopper’s past interactions and sends the next offer through the channel and at the moment that person is most likely to engage. For one shopper, that could mean an evening push notification; for another, an early-morning email. Every individual’s history continuously updates future decisions.
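Choosing when and where can be framed as a bandit over (channel, time slot) pairs. Below is a minimal per-shopper sketch using UCB1, which adds an exploration bonus to pairs that have been tried less often; the channels, time slots, and history format are hypothetical.

```python
import math
from itertools import product

CHANNELS = ["push", "email"]           # hypothetical channels
SLOTS = ["morning", "evening"]         # hypothetical send windows
ARMS = list(product(CHANNELS, SLOTS))  # every (channel, time slot) pair


def ucb1_choice(history, c=2.0):
    """Pick a (channel, slot) for one shopper using UCB1 on their own history.

    history maps (channel, slot) -> (engagements, sends). Untried pairs are
    chosen first; afterwards each pair scores
    mean + sqrt(c * ln(total_sends) / sends), and the highest score wins.
    """
    for arm in ARMS:
        if history.get(arm, (0, 0))[1] == 0:
            return arm
    total = sum(sends for _, sends in history.values())

    def score(arm):
        engaged, sends = history[arm]
        return engaged / sends + math.sqrt(c * math.log(total) / sends)

    return max(ARMS, key=score)
```

When every pair has the same number of sends, the bonus is equal and the pair with the best engagement rate wins; a pair tried only once gets a large bonus and is retried. Per-user history is sparse, so in practice a model typically also shares what it learns across similar customers through context features.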

Optimizing promotional offers dynamically

Instead of promoting the same offer to every audience, a contextual bandit tailors the incentive to each person’s live context. It learns which offers drive the highest likelihood of conversion for that specific user and refines its predictions as behavior changes.

Example: A travel brand uses contextual bandit logic to choose between several offers—discounts, upgrades, or loyalty rewards—at the individual level. A traveler who often books last-minute trips might receive a spontaneous upgrade, while another who values flexibility could be offered free cancellation. Each user’s choice feeds the model, so their next offer becomes even more relevant.
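A lookup table per user can't say anything about a traveler the model hasn't met yet. One common alternative is to predict conversion from features, with one model per offer. The sketch below swaps in online logistic regression trained by stochastic gradient descent; the offers, the two features (books last-minute, values flexibility), and the learning rate are hypothetical.

```python
import math
import random

OFFERS = ["discount", "upgrade", "free_cancellation"]  # hypothetical offer set


class OnlineLogisticOfferModel:
    """One logistic-regression model per offer, trained online with SGD."""

    def __init__(self, n_features, learning_rate=0.05):
        self.lr = learning_rate
        # weights[offer] holds a bias term followed by one weight per feature
        self.weights = {o: [0.0] * (n_features + 1) for o in OFFERS}

    def predict(self, offer, x):
        # Predicted conversion probability for showing `offer` to profile x
        w = self.weights[offer]
        z = w[0] + sum(wi * xi for wi, xi in zip(w[1:], x))
        return 1.0 / (1.0 + math.exp(-z))

    def update(self, offer, x, converted):
        # One gradient step on log loss, only for the offer actually shown
        error = converted - self.predict(offer, x)
        w = self.weights[offer]
        w[0] += self.lr * error
        for i, xi in enumerate(x):
            w[i + 1] += self.lr * error * xi

    def best_offer(self, x):
        return max(OFFERS, key=lambda o: self.predict(o, x))
```

Training on uniformly random offers is the pure-exploration case; a live policy would usually serve `best_offer()` most of the time and explore the rest, as in epsilon-greedy.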

Improving creative selection with user-level context

Contextual bandits also adapt creative elements for each person, not just by audience type. They learn which tone, visual, or layout resonates most for every individual, adjusting creative combinations automatically.

Example: A streaming service uses contextual bandit decisioning to optimize promotional banners. For one subscriber, the model might prioritize bold visuals featuring their favorite genre; for another, a quieter layout highlighting new releases. Each impression updates the system, shaping the next creative decision in real time.

Kayo Sports: Continuous learning boosts engagement

Kayo Sports is the largest and fastest-growing sports streaming service in Australia. Over 50 sports are streamed live and on demand through its platform, with thousands of hours of entertainment and documentaries available in addition to sports content.

The challenge

Kayo Sports wanted to increase engagement among millions of subscribers with diverse interests. Traditional testing was too slow to keep up with fast-changing content cycles and audience behavior.

The strategy

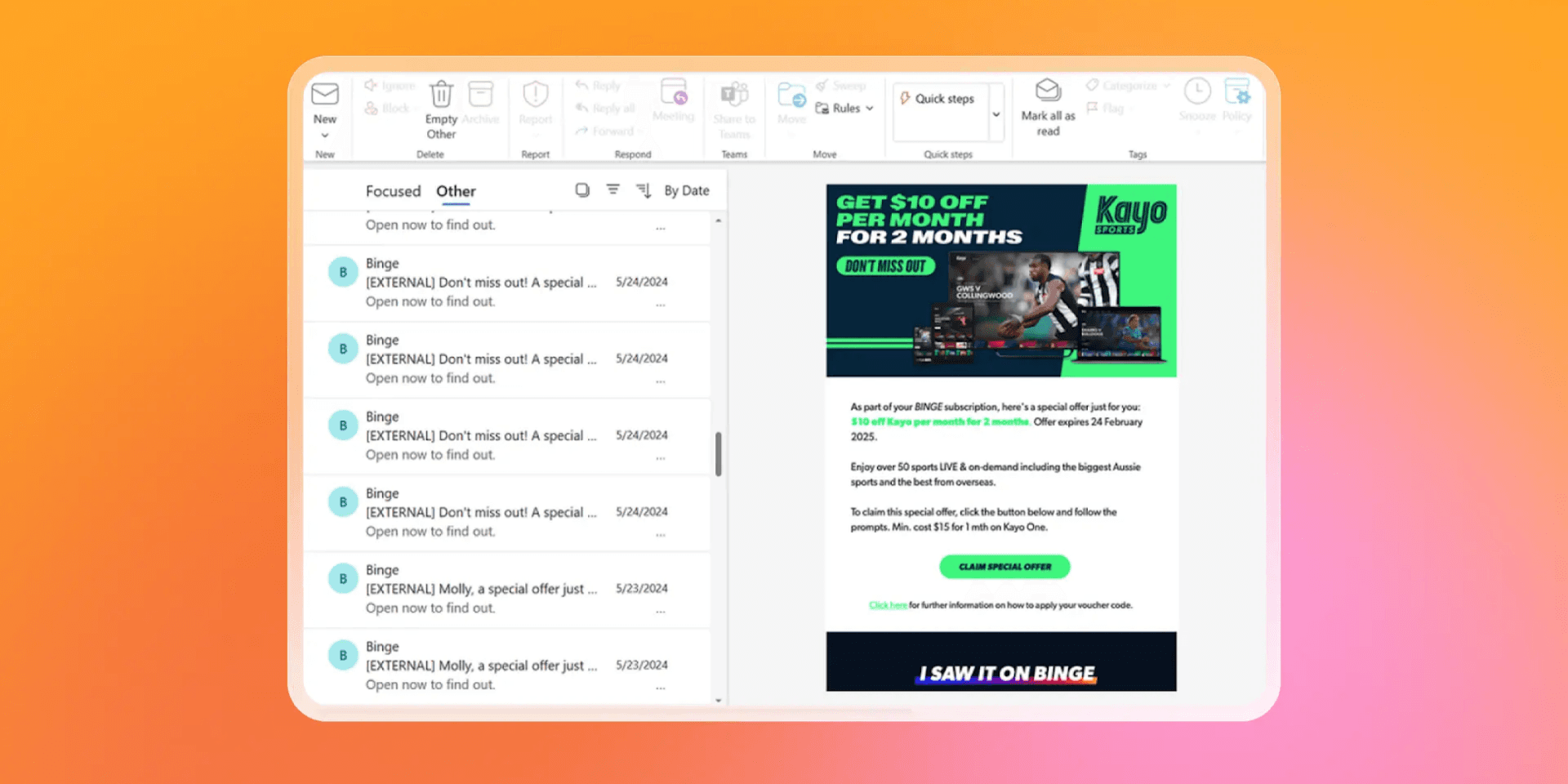

Using BrazeAI Decisioning Studio™ and Canvas, Kayo adopted contextual bandit logic to optimize in-app and push notifications. The solution evaluated contextual factors like viewing history, device, and timing to determine which message—highlighting upcoming games, subscription reminders, or new features—was most likely to engage each user.

The wins

Kayo saw faster learning cycles, higher message open rates, and improved retention among active subscribers.

- 14% increase in customers reactivating within 12 months of churning

- 8% increase in average annual occupancy

- 105% increase in cross-selling to BINGE, another streaming service in the Foxtel portfolio

Campaigns optimized themselves in near real time, allowing the team to focus on creative strategy instead of manual test management.

How Braze brings contextual bandits to life

Contextual bandits are powerful in theory, but their real value comes from how brands apply them. Within Braze, they’re not a feature you toggle on—they’re part of how AI decisioning and orchestration work together to create personalized, adaptive experiences at scale.

AI decisioning with BrazeAI Decisioning Studio™ and Canvas

BrazeAI Decisioning Studio™ uses the logic of the contextual bandit algorithm to determine the next best everything for each user—selecting the right message, offer, or channel based on real-time context. Within Canvas, marketers can orchestrate these adaptive decisions across journeys, so every interaction becomes part of a continuous feedback loop.

Continuous learning through streaming data

Because Braze operates on live, event-based data, the model updates in the moment rather than in delayed batches. As customer behavior shifts, so do the model's decisions. This lets experimentation and AI personalization happen simultaneously, giving marketers confidence that every touchpoint reflects the most recent engagement data.

Real-time orchestration for personalized campaigns

Contextual bandit logic comes to life when combined with Braze orchestration. Decisions made in BrazeAI Decisioning Studio™ can trigger personalized flows in Canvas, so messages are delivered through the best channel, at the right time, and with the most relevant creative. This creates a seamless customer experience—and a system that never stops learning.

See how AI decisioning solutions use contextual bandit logic to personalize every interaction in real time.

FAQs about contextual bandits

What are contextual bandits and how do they work?

Contextual bandits are AI decisioning models that use real-time data—like behavior, preferences, or timing—to choose the best message, channel, or offer for each user. They learn continuously, adjusting after every interaction.

What’s the difference between contextual bandits and multi-armed bandits?

Multi-armed bandits find one winning version for everyone, while contextual bandits personalize decisions for each individual using real-time context. This makes them better suited for adaptive, one-to-one marketing.

How do contextual bandits apply to marketing and personalization?

Contextual bandits apply to marketing by turning campaigns into live learning systems that personalize every interaction in real time. They decide when, where, and how to engage based on what’s most likely to drive action.

What data is needed for contextual bandit algorithms to succeed?

For contextual bandit algorithms to succeed, they need accurate, consented data such as engagement history, recency, and device type. BrazeAI Decisioning Studio™ uses streaming data to keep personalization timely and context-aware.

How does Braze use contextual bandits to improve engagement?

Braze uses contextual bandits to improve engagement through BrazeAI Decisioning Studio™ and Canvas. These solutions analyze customer behavior, choose the next best action, and adapt campaigns in real time—driving stronger engagement without manual testing.

Related Tags

Be Absolutely Engaging.™

Sign up for regular updates from Braze.

Related Content

Article3 min read

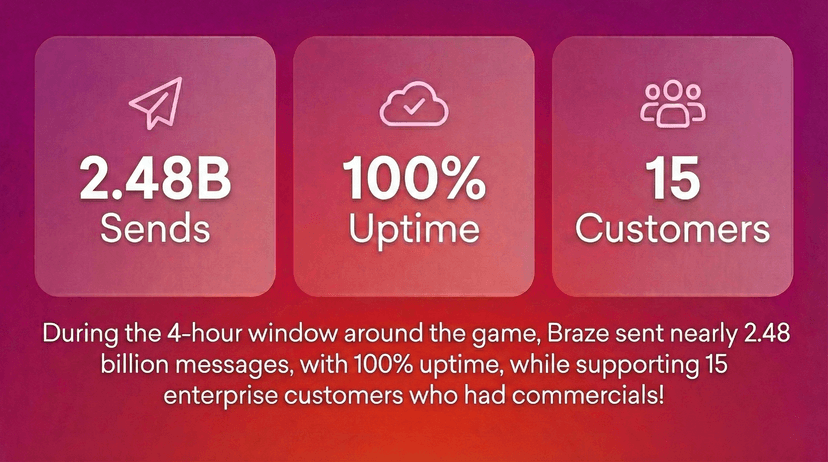

Article3 min read2.4+ billion sends, zero fumbles: How Braze supports leading brands during the big game

February 09, 2026 Article4 min read

Article4 min readBeyond Predictions: Why Your Personalization Strategy Needs an AI Decisioning Agent

February 09, 2026 Article6 min read

Article6 min readThe OS and inbox as intermediary: How AI is (literally) rewriting customer engagement

February 06, 2026