AI experimentation platform for measurable marketing wins

Published on October 31, 2025 / Last edited on October 31, 2025 / 15 min read

Team Braze

More than 80% of AI projects fail to deliver measurable impact—a statistic that underscores why structured online experimentation for AI matters. Offline model training and long release cycles miss the complexity of live customer behavior. What looks promising in development often unravels in production.

An AI experimentation platform changes this by making it possible to test safely in real time, using live signals and guardrails to guide decisions. Marketers can validate prompts, models, and engagement strategies directly with customers, instead of gambling on assumptions, and then adapt based on evidence.

By tying every experiment to KPIs like click-through rate (CTR), conversion rate (CVR), retention, and customer lifetime value (CLV), AI stops being a shiny object and becomes a disciplined driver of business outcomes.

In this article, we’ll look at what an AI experimentation platform can help brands achieve, what to actually test, and methods you can use today. We’ll also share some real-life examples of successful AI experimentation in action.

Contents

What is an AI experimentation platform

Benefits of AI experimentation

A framework for the AI experimentation loop

Methods that fit AI experimentation

Marketing use cases and outcomes

How online experimentation for AI works in practice

Best practices for AI experimentation

Final thoughts on AI experimentation

What is an AI experimentation platform?

An AI experimentation platform is a system that lets marketers test, evaluate, and continuously improve AI models, prompts, and decisions by running controlled experiments in real time.

Instead of guessing what will work, you can expose small groups of customers to different variations—whether it’s copy, offers, or model outputs—measure their responses, and use that data to guide the next step.

Unlike traditional A/B testing, which applies the same variation across broad segments, AI experimentation adapts at the individual level.

Benefits of AI experimentation

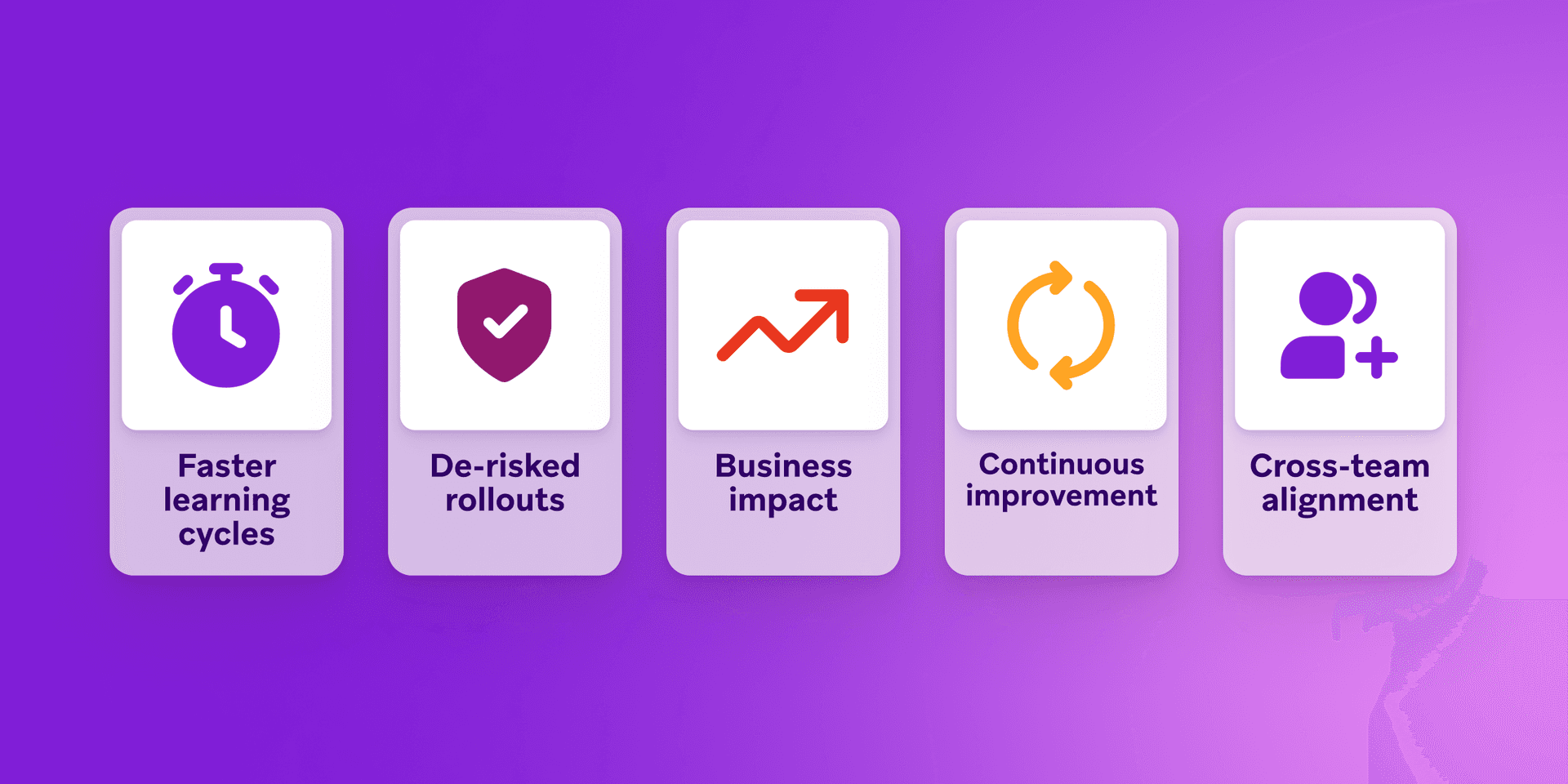

The value of an AI experimentation platform comes from creating a safer, smarter way to uncover what drives business outcomes. Key benefits include:

- Faster learning cycles: Test prompts, models, or offers in days instead of months, and get insights from real customer interactions.

- De-risked rollouts: Use guardrails and controlled exposure strategies to limit risk while you learn what works.

- Business impact: Tie every experiment to KPIs like CTR, CVR, retention, and CLV so outcomes connect directly to revenue and growth.

- Continuous improvement: Each test builds on the last, creating a compounding knowledge base that strengthens decision-making.

- Cross-team alignment: Experimentation gives marketing, product, and data teams a shared way to evaluate ideas and act on evidence.

What marketers actually test

An AI experimentation platform can be applied to nearly every part of the customer journey, helping brands map what matters. The most common areas marketers test include:

Prompt experimentation

Different prompts or copy variants can be tested for tone, style, or relevance. What resonates with one segment may fall flat with another, and live experiments help reveal which messages drive engagement.

Model experimentation

Marketers can compare different AI models or parameter sets to see which provides the best predictions or responses. Running models in parallel, sometimes as shadow tests, helps teams identify winners before scaling.

Offer logic

Experiments can compare incentives such as discounts, free shipping, or loyalty perks. By measuring both conversion and margin impact, marketers can fine-tune offers for sustainable growth.

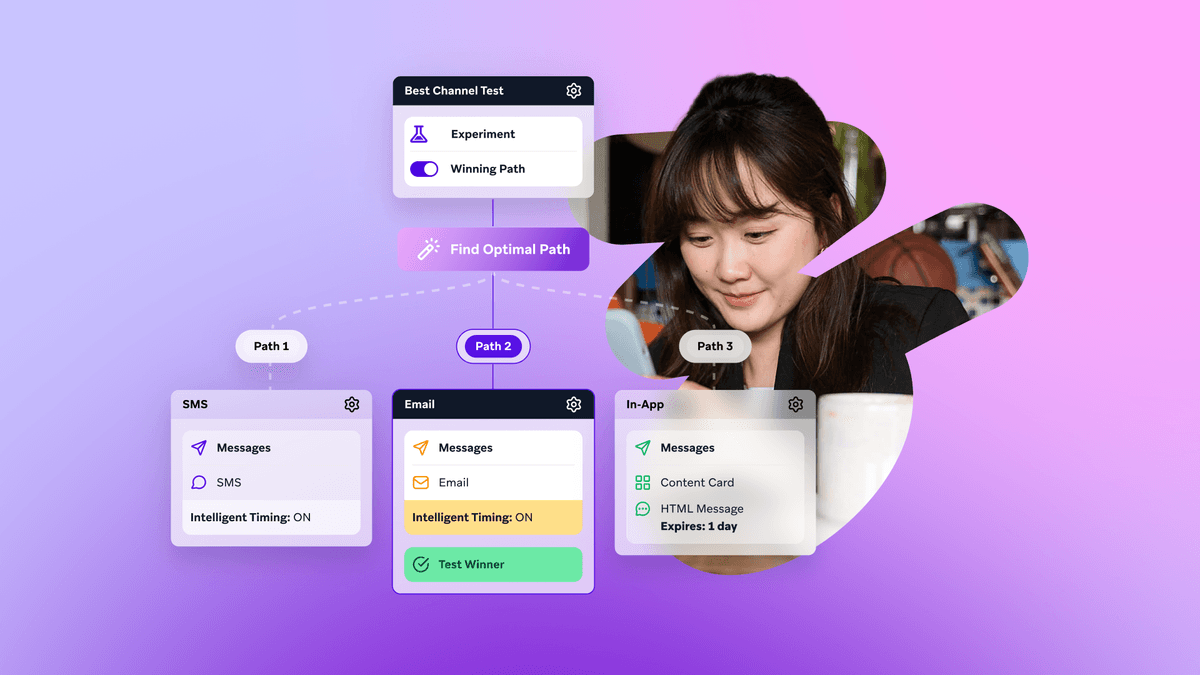

Send-time and channel selection

Testing the timing of messages and the channels used—email, push, SMS, or in-app—reveals the mix that works best for each customer. Reinforcement learning can then personalize these decisions at scale.

Policy and guardrail tests

Frequency caps, tone filters, and compliance rules can themselves be tested and adjusted. This helps guardrails protect the customer experience without limiting performance.

A framework for the AI experimentation loop

Running AI experiments works best with a repeatable loop. This framework keeps testing focused, safe, and tied to measurable outcomes.

1. Set an objective and KPI

Every experiment should start with a single success measure. This could be conversion rate, revenue per user, retention, or churn reduction. Having one clear KPI prevents dilution and makes results easier to interpret.

2. Design a hypothesis

Frame your test as a simple cause-and-effect statement: “If we change X, we expect Y to improve.” For example, “If we send reminders via push instead of email, we expect cart completion rates to rise.” This step clarifies the purpose of the experiment.

3. Add guardrails

Guardrails protect the brand and the customer experience. These can include compliance requirements, frequency caps, fairness limits, or tone checks. They keep experiments from producing short-term wins that damage trust in the long run.
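
As an illustration, two of these guardrails (a frequency cap and a tone check) can be expressed as simple pre-send checks. The sketch below is a minimal Python example under stated assumptions: the cap of three messages per seven days, the blocked terms, and the function names are all hypothetical, not part of any specific platform.

```python
from datetime import datetime, timedelta

# Hypothetical policy values; real caps and blocked terms would come
# from brand, legal, and compliance teams.
MAX_MESSAGES = 3
WINDOW = timedelta(days=7)
BLOCKED_TERMS = {"guaranteed", "act now"}


def within_frequency_cap(sent_at, now, max_messages=MAX_MESSAGES, window=WINDOW):
    """True if sending one more message keeps the user within the cap."""
    recent = [t for t in sent_at if now - window < t <= now]
    return len(recent) < max_messages


def passes_tone_filter(message, blocked_terms=BLOCKED_TERMS):
    """True if the message contains none of the blocked terms (case-insensitive)."""
    lowered = message.lower()
    return not any(term in lowered for term in blocked_terms)


def can_send(message, sent_at, now):
    """A variant is delivered only when every guardrail passes."""
    return within_frequency_cap(sent_at, now) and passes_tone_filter(message)
```

Because the checks run before any variant is delivered, an experiment can explore freely inside the limits while the limits themselves stay fixed and auditable.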

4. Plan exposure

Rollouts should move gradually from a small sample to the full audience. A canary release to 1-5% of users can identify early issues before scaling to 20%, 50%, and beyond. This staged approach balances speed with safety.
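
One common way to implement staged exposure is deterministic hash bucketing, so that a user who enters at the canary stage stays exposed as the percentage grows. The Python sketch below assumes string user IDs and a hypothetical experiment name; the stage list mirrors the percentages mentioned above.

```python
import hashlib


def bucket(user_id: str, experiment: str) -> float:
    """Map a user to a stable value in [0, 100) for this experiment."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    # The first 8 hex characters form a 32-bit integer; scale it to a percentage.
    return int(digest[:8], 16) / 0x100000000 * 100


def is_exposed(user_id: str, experiment: str, rollout_percent: float) -> bool:
    """True if the user falls inside the current rollout stage."""
    return bucket(user_id, experiment) < rollout_percent


# Hypothetical stages: canary, then 20%, 50%, and the full audience.
STAGES = [5, 20, 50, 100]

if __name__ == "__main__":
    users = [f"user-{i}" for i in range(10_000)]
    for pct in STAGES:
        exposed = sum(is_exposed(u, "push-vs-email", pct) for u in users)
        print(f"{pct:>3}% stage: {exposed} of {len(users)} users exposed")
```

Including the experiment name in the hash keeps assignments independent across experiments, so the same users are not always the first to see every change.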

5. Measure outcomes

Look at uplift, confidence intervals, and long-tail effects—not just immediate gains. A change that boosts click-through today could increase churn later. Careful measurement gives a more complete picture of impact.
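
To make uplift and confidence intervals concrete, here is a minimal Python sketch for a conversion-rate KPI. It uses a normal-approximation (Wald) interval for the difference between two proportions, which is one reasonable choice among several; the function name and the example counts are illustrative assumptions.

```python
import math


def uplift_with_ci(control_conv, control_n, variant_conv, variant_n, z=1.96):
    """Absolute and relative uplift with an approximate 95% interval.

    Uses the normal (Wald) approximation for a difference of two
    proportions, which is reasonable for large samples.
    """
    p_c = control_conv / control_n
    p_v = variant_conv / variant_n
    diff = p_v - p_c
    se = math.sqrt(p_c * (1 - p_c) / control_n + p_v * (1 - p_v) / variant_n)
    return {
        "absolute_uplift": diff,
        "relative_uplift": diff / p_c,
        "ci_low": diff - z * se,
        "ci_high": diff + z * se,
    }


if __name__ == "__main__":
    # Made-up counts: 400 of 10,000 control users and 460 of 10,000
    # variant users converted.
    print(uplift_with_ci(400, 10_000, 460, 10_000))
```

With these made-up counts, the absolute uplift is 0.6 percentage points (15% relative), and the interval runs from roughly 0.04 to 1.16 percentage points. The interval excludes zero but is wide, which is a reason to keep measuring before declaring a winner.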

6. Decide and document

Act on the evidence—ship the winning version, roll back if needed, or iterate with a new test. Just as important, log learnings in a shared system so insights from one experiment can inform the next.

Methods that fit AI experimentation

Different methods help marketers test AI safely and effectively. The right choice depends on the scale of the experiment, the data available, and the type of decision being optimized.

A/B and multivariate testing

Classic A/B tests compare two versions of a message, model, or offer. Multivariate tests expand this to multiple variables at once. These approaches are easy to understand and provide a solid baseline for experimentation, especially when testing simple changes like subject lines or prompts.

Multi-armed bandits

Bandit algorithms dynamically shift traffic toward the best-performing variant while the test is still running. Instead of waiting for a fixed end date, marketers get faster results and reduce wasted exposure to underperforming versions.
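
Thompson sampling is one widely used bandit algorithm, and a small simulation shows how traffic drifts toward the stronger variant. The sketch below is a simplified Python illustration, not a description of any particular platform's implementation; the class name, variant names, and conversion rates are assumptions.

```python
import random


class ThompsonBandit:
    """Beta-Bernoulli Thompson sampling over a set of message variants."""

    def __init__(self, variants, seed=None):
        self.rng = random.Random(seed)
        # Beta(1, 1) prior: one pseudo-success and one pseudo-failure each.
        self.successes = {v: 1 for v in variants}
        self.failures = {v: 1 for v in variants}

    def choose(self):
        """Sample a plausible conversion rate per variant and pick the highest."""
        samples = {
            v: self.rng.betavariate(self.successes[v], self.failures[v])
            for v in self.successes
        }
        return max(samples, key=samples.get)

    def update(self, variant, converted):
        """Record whether the customer who saw this variant converted."""
        if converted:
            self.successes[variant] += 1
        else:
            self.failures[variant] += 1


def simulate(true_rates, rounds, seed=0):
    """Run the bandit against made-up conversion rates; count sends per variant."""
    bandit = ThompsonBandit(true_rates, seed=seed)
    customers = random.Random(seed + 1)
    sends = {v: 0 for v in true_rates}
    for _ in range(rounds):
        variant = bandit.choose()
        sends[variant] += 1
        bandit.update(variant, customers.random() < true_rates[variant])
    return sends


if __name__ == "__main__":
    print(simulate({"subject_a": 0.05, "subject_b": 0.30}, rounds=3000, seed=7))
```

Early on, both variants receive traffic because the sampled rates overlap. As evidence accumulates, the weaker variant is chosen less and less often, which is the reduction in wasted exposure described above.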

Reinforcement learning experimentation

Reinforcement learning continuously adapts to each customer in real time. Agents learn through trial and error, adjusting decisions for offers, channels, or timing to maximize outcomes like conversions or retention. This approach moves beyond one-off tests into ongoing optimization.
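
Full reinforcement learning systems are considerably more involved, but the core trial-and-error idea can be sketched with a per-context epsilon-greedy bandit, a much simpler relative. In the Python sketch below, the context labels (such as "lapsed"), the action names, and the 0/1 reward are all illustrative assumptions.

```python
import random
from collections import defaultdict


class ContextualEpsilonGreedy:
    """Learns, per customer context, which action earns the highest average reward."""

    def __init__(self, actions, epsilon=0.1, seed=None):
        self.actions = list(actions)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts = defaultdict(int)    # (context, action) -> times tried
        self.values = defaultdict(float)  # (context, action) -> mean reward

    def choose(self, context):
        """Explore a random action with probability epsilon, else exploit."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)
        return max(self.actions, key=lambda a: self.values[(context, a)])

    def update(self, context, action, reward):
        """Fold the observed reward (e.g. 1.0 for a conversion) into the running mean."""
        key = (context, action)
        self.counts[key] += 1
        self.values[key] += (reward - self.values[key]) / self.counts[key]


if __name__ == "__main__":
    agent = ContextualEpsilonGreedy(["push", "email", "sms"], epsilon=0.1, seed=3)
    agent.update("lapsed", "sms", 1.0)   # a lapsed user converted after an SMS
    agent.update("active", "push", 1.0)  # an active user converted after a push
    print(agent.choose("lapsed"), agent.choose("active"))
```

The exploration rate is the design trade-off here: a higher epsilon keeps learning about alternatives but sends more customers a choice that is currently believed to be second best.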

Online vs. offline experimentation and evaluation

Before going live, shadow tests run new models or prompts in the background without affecting customers. Offline evaluation is useful for spotting errors early, while online confirmation validates results in real-world conditions.
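
A shadow test can be sketched as a wrapper that always returns the live model's decision while logging what the candidate would have done. The Python example below is a minimal illustration; the function names, the log structure, and the idea of models as plain callables are assumptions made for the sketch.

```python
def serve_with_shadow(features, live_model, shadow_model, log):
    """Return the live model's output; record the shadow output for comparison."""
    live_output = live_model(features)
    try:
        shadow_output = shadow_model(features)
        shadow_error = None
    except Exception as exc:  # a shadow failure must never reach the customer
        shadow_output = None
        shadow_error = repr(exc)
    log.append(
        {
            "features": features,
            "live": live_output,
            "shadow": shadow_output,
            "shadow_error": shadow_error,
        }
    )
    return live_output


def agreement_rate(log):
    """Share of logged requests where the shadow model ran and matched the live one."""
    if not log:
        return 0.0
    matches = sum(
        1 for row in log
        if row["shadow_error"] is None and row["live"] == row["shadow"]
    )
    return matches / len(log)


if __name__ == "__main__":
    log = []
    live = lambda f: "email"                                   # current channel rule
    candidate = lambda f: "push" if f["opens"] < 2 else "email"  # hypothetical new rule
    for opens in (0, 1, 5, 8):
        serve_with_shadow({"opens": opens}, live, candidate, log)
    print(f"agreement: {agreement_rate(log):.0%}")
```

In a production setting the shadow call would typically run asynchronously so it cannot add latency to the live path. The logged disagreements are the cases worth reviewing before any online test.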

CUPED and counterfactuals

Advanced statistical techniques like CUPED (Controlled-experiment Using Pre-Experiment Data) and counterfactual analysis (examining hypothetical “what if” scenarios) reduce noise in experiments. They help teams run tests with smaller samples, shorten experiment time, and measure impact more precisely.
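
The CUPED adjustment itself is short: each user's metric y is replaced by y - theta * (x - mean(x)), where x is the same user's pre-experiment value and theta = cov(x, y) / var(x). The Python sketch below assumes the inputs are pooled across control and variant so that a single theta is applied to both groups; the sample numbers are made up.

```python
from statistics import mean, pvariance


def cuped_theta(metric, pre_metric):
    """theta = cov(x, y) / var(x), estimated from paired per-user values."""
    x_bar, y_bar = mean(pre_metric), mean(metric)
    cov = sum((x - x_bar) * (y - y_bar) for x, y in zip(pre_metric, metric))
    var_x = sum((x - x_bar) ** 2 for x in pre_metric)
    return cov / var_x if var_x else 0.0


def cuped_adjust(metric, pre_metric):
    """Return adjusted values y - theta * (x - mean(x)); the mean is unchanged."""
    theta = cuped_theta(metric, pre_metric)
    x_bar = mean(pre_metric)
    return [y - theta * (x - x_bar) for x, y in zip(pre_metric, metric)]


if __name__ == "__main__":
    pre_spend = [10, 20, 30, 40, 50]   # spend before the experiment (made up)
    spend = [12, 19, 33, 41, 48]       # spend during the experiment (made up)
    adjusted = cuped_adjust(spend, pre_spend)
    print("variance before:", pvariance(spend))
    print("variance after: ", pvariance(adjusted))
```

The adjustment leaves the average untouched and removes the part of the variance that pre-experiment behavior already explains, which is why the same effect can be detected with fewer users.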

Together, these methods provide a toolkit that balances speed, safety, and accuracy—allowing marketers to choose the right level of sophistication for each experiment.

Marketing use cases and outcomes

An AI experimentation platform only proves its value when applied to real marketing challenges. From onboarding to checkout, every stage of the customer journey presents opportunities to test, learn, and optimize.

The following examples show how marketers are using experimentation to solve common challenges and achieve measurable outcomes.

Personalized lifecycle onboarding

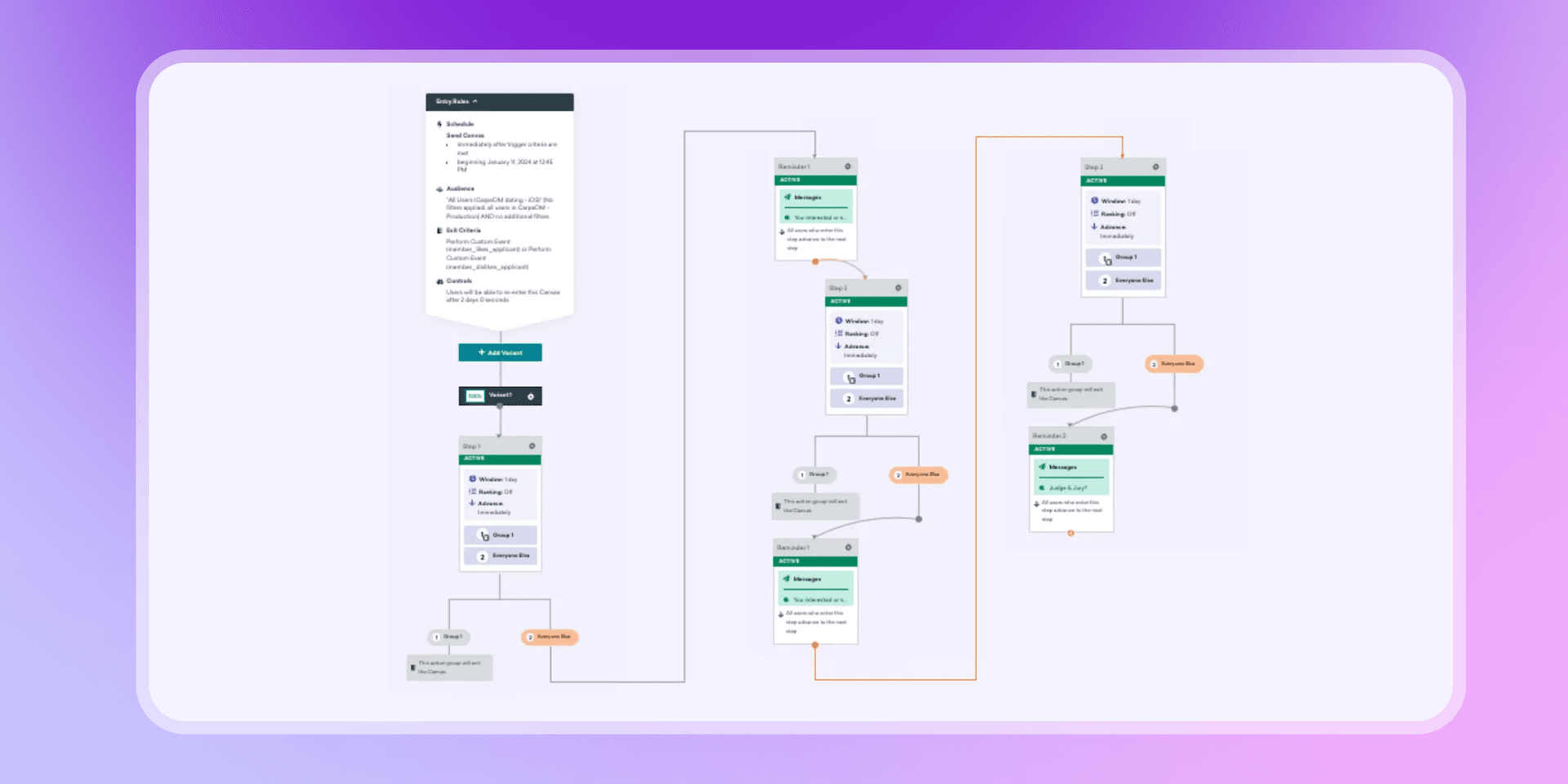

CarpeDM is a member-only dating community built for accomplished Black women and those seeking meaningful relationships. The company combines traditional matchmaking with modern app-based features, including video-first dating, with a mission to elevate Black women, build families, and foster community wealth.

The challenge

As a new service, CarpeDM struggled with drop-off during its involved onboarding process, which required profile reviews, consultations with matchmakers, and background checks. The team needed a way to keep members engaged through each step while driving more conversions.

The strategy

Using Braze Canvas, CarpeDM designed a personalized, cross-channel onboarding flow. Push notifications prompted members to review new applicants, while email campaigns re-engaged applicants who had received “likes.” A/B tests explored different message framings—positioning membership as part of an exclusive community versus highlighting matching opportunities. Controls like frequency caps and Action Paths prevented over-messaging and made interactions feel relevant.

The wins

- 84% of members engaged with the profile review process

- 15% of new customers converted through engagement campaigns

- Improved brand equity by fostering loyalty through timely and meaningful notifications

By experimenting with timing, framing, and channel mix, CarpeDM turned a potential drop-off point into an engaging, value-adding experience. Personalized onboarding became a driver of long-term loyalty.

Offer personalization

Not every customer responds the same way to discounts, and blanket codes can quickly eat into margins. With AI experimentation, marketers can test different incentive types—such as a percentage off, free shipping, or a value-add perk—across segments and channels. Early experiments reveal which incentives drive conversions for different cohorts, while reinforcement learning adapts over time to fine-tune the mix at the individual level.

For example, a subscription meal-kit company noticed that many customers abandoned their carts after browsing premium recipes. Instead of defaulting to blanket discounts, the team set up an AI experiment to compare incentives. One group received 15% off, another was offered free delivery on their first two boxes, and a smaller group unlocked an exclusive recipe add-on at no cost. Results showed that free delivery produced almost the same conversion lift as the discount but at a lower cost, while the exclusive recipe add-on proved most effective for high-value customers who stayed engaged longer.

Churn prevention

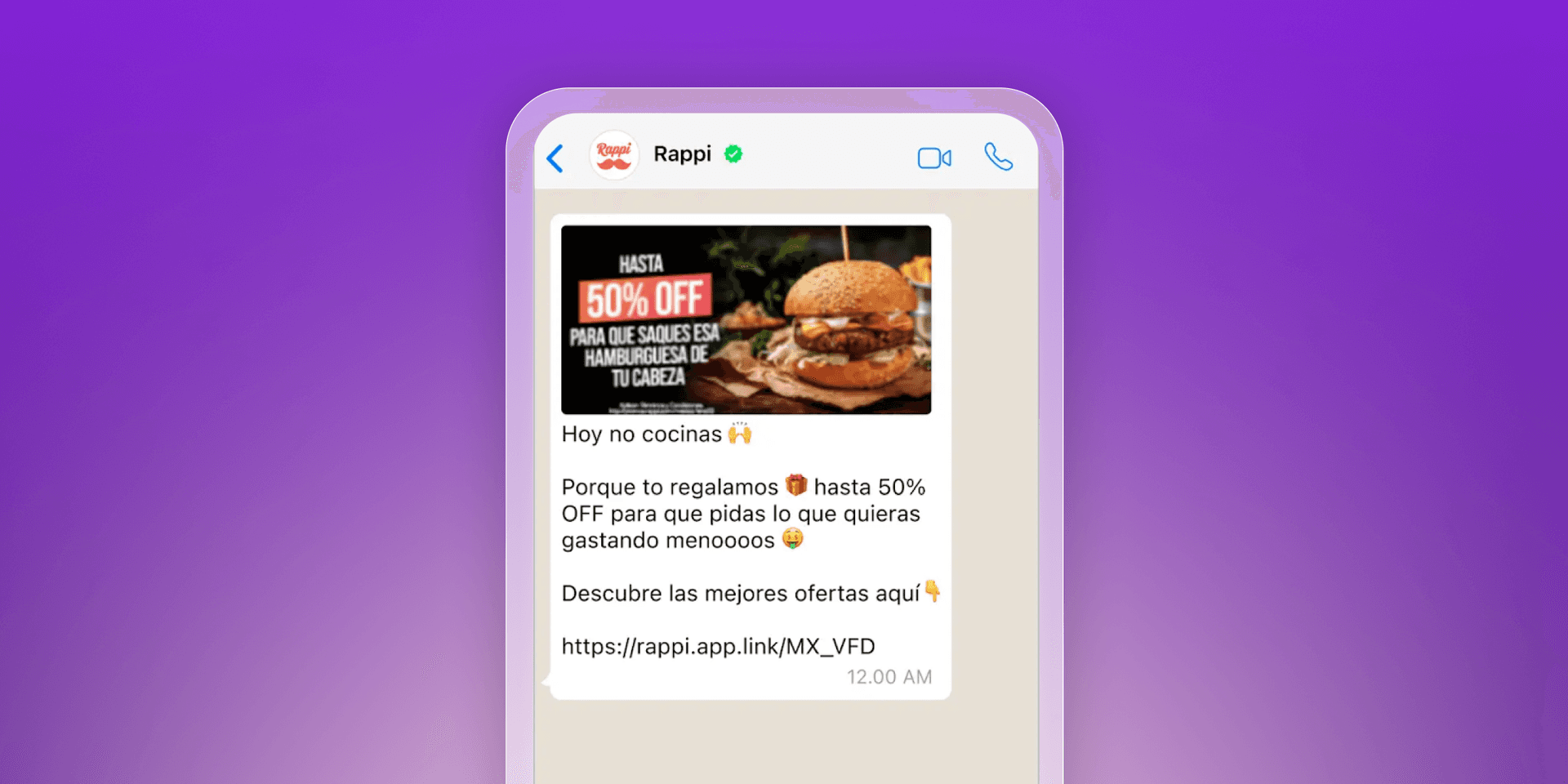

Founded in Bogotá, Colombia, in 2015, Rappi is Latin America’s first “superapp,” connecting millions of users with on-demand delivery, shopping, and services across nine countries. To keep customers coming back in a crowded market, Rappi needed a way to reactivate lapsed users while strengthening loyalty among active ones.

The challenge

Rappi noticed that push and email campaigns were no longer enough to re-engage customers at risk of churn. Lapsed users needed more direct outreach, while active users required timely nudges to keep them purchasing regularly.

The strategy

Using Braze Canvas, Rappi expanded its retention and winback strategy to include WhatsApp, a widely adopted channel in its markets. Campaigns targeted two segments: Momentum (active users) and Reactivation (lapsed users). Personalized deals were sent via WhatsApp alongside existing push and email campaigns. Templates built in WhatsApp Manager were easily integrated into Braze, making it possible to scale personalization quickly and test different approaches.

The wins

- 80% uplift in purchases from lapsed users versus control groups

- 28% lift in users who reactivated and made one purchase within 30 days

- 43% lift in users who reactivated and made two or more purchases within 30 days

By experimenting with new channels and message strategies, Rappi reduced churn and increased purchase frequency. WhatsApp’s high read rate made it a powerful complement to existing channels, proving that adding the right touchpoint can turn at-risk customers into active, long-term users.

Cart and checkout optimization

Timing and channel choice can make or break the final step of the purchase journey. AI experimentation helps teams test not just what message to send, but when and where to send it. By experimenting with channels like push, email, and SMS, as well as timing windows—from immediate reminders to next-day nudges—marketers can find the mix that lifts add-to-cart rates and reduces checkout abandonment. Reinforcement learning then adapts these choices at the individual level, so that each customer gets the right nudge at the right moment.

This is how it might work in practice: A fashion retailer noticed a high rate of abandoned carts during seasonal sales. Instead of sending the same follow-up email to everyone, they set up an AI experiment. Some shoppers received a push notification within 30 minutes of abandoning their cart, others got an SMS reminder the next morning, and a third group saw a targeted in-app message when they reopened the app. Results showed that push drove the fastest conversions, SMS worked best for customers who hadn’t engaged in over a week, and in-app reminders performed well for repeat buyers. Over time, reinforcement learning automatically assigned the right channel and timing to each shopper, increasing overall purchases while reducing unnecessary messages.

How online experimentation for AI works in practice

AI experimentation becomes most effective when it’s built into the way marketers already run campaigns and measure outcomes. A few core capabilities make this possible:

Cross-channel orchestration

Experiments can run across email, push, SMS, in-app, and web within the same customer journey. This makes it possible to compare performance across channels or test how different touchpoints work together.

Continuous learning systems

Reinforcement learning agents take experimentation further by testing multiple variables—such as message type, timing, and channel—at once. Over time, they adapt to each individual’s behavior, turning one-off tests into ongoing optimization.

Built-in guardrails

Brand rules, frequency caps, policy filters, and approval steps help teams test safely. Guardrails reduce risk by preventing over-messaging or off-brand outputs, even when experiments scale to large audiences.

Measurement and visibility

Clear reporting connects experiments to KPIs like retention, CLV, or revenue per user. Marketers can see both immediate results and long-tail effects, making it easier to decide whether to roll out, roll back, or iterate.

Best practices for AI experimentation

Good AI experimentation is repeatable and safe. The practices below help teams move fast while staying accurate and compliant—using risk-controlled rollouts, clear guardrails, and online signals to learn from real behavior. Treat this as a checklist to align on one KPI, capture clean data, and turn wins into durable, cross-channel improvements.

Start with one KPI and a baseline

Pick a single success measure—CTR, CVR, retention, or CLV—and record a pre-test baseline. One KPI keeps analysis clean and decisions clear.

Write crisp hypotheses and stop rules

State the expected change and define guardrails for stopping or pausing a test. This avoids “fishing” for significance or running experiments indefinitely.
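
A stop rule is easiest to honor when it is written down as data before launch. The Python sketch below is one hypothetical shape for such a rule, using a planned sample size and an unsubscribe-rate guardrail; the field names, thresholds, and action labels are assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StopRule:
    planned_sample_size: int       # fixed before launch to avoid peeking
    max_unsubscribe_rate: float    # guardrail metric threshold
    min_users_for_guardrail: int   # avoid pausing on a handful of users


def next_action(rule: StopRule, users_observed: int, unsubscribes: int) -> str:
    """Return 'pause', 'analyze', or 'continue' for the current test state."""
    if users_observed >= rule.min_users_for_guardrail:
        if unsubscribes / users_observed > rule.max_unsubscribe_rate:
            return "pause"    # guardrail breached: stop exposure and investigate
    if users_observed >= rule.planned_sample_size:
        return "analyze"      # planned sample reached: read the results once
    return "continue"


if __name__ == "__main__":
    rule = StopRule(planned_sample_size=10_000,
                    max_unsubscribe_rate=0.02,
                    min_users_for_guardrail=500)
    print(next_action(rule, users_observed=1_000, unsubscribes=30))
```

Fixing the sample size in advance is what prevents fishing for significance: the primary KPI is read once, at the planned point, while the guardrail can still halt the test early for safety reasons.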

Use feature flags and canary releases

Gate every change behind a flag and start with a small audience. Scale from 1-5% to broader cohorts only when metrics and safety checks look solid. These risk-controlled rollouts reduce exposure to bad variants.

Favor online experimentation for AI

Validate offline with shadow tests, then confirm with live traffic. Online signals surface edge cases, latency issues, and real behavior you won’t catch in batch tests.

Design clean cohorts and holdouts

Run cohort testing with clearly separated audiences and persistent holdouts to measure true lift, not noise from cross-exposure or seasonality.

Measure with the right methods

Use CUPED or counterfactuals to reduce variance, and report confidence or credible intervals—not just point estimates. Track near-term lifts and long-tail effects on retention and CLV.

Build strong guardrails and governance

Apply frequency caps, tone and policy filters, fairness checks, and approvals. Guardrails protect brand trust while you experiment at scale.

Start simple, then graduate methods

Use an AI A/B testing platform for simple comparisons. Move to multi-armed bandits when you need faster allocation, and to reinforcement learning experimentation when decisions must adapt per user in real time.

Log everything and share learnings

Version prompts, model IDs, data sources, and variants. Keep a lightweight registry so insights compound across teams and journeys.
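
A lightweight registry can be as simple as one structured record per experiment, appended to a shared log. The Python sketch below shows one possible record shape; every field name and example value is a hypothetical illustration rather than a prescribed schema.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class ExperimentRecord:
    experiment_id: str
    hypothesis: str
    kpi: str
    prompt_version: str
    model_id: str
    data_sources: list
    variants: list
    decision: str = "pending"  # e.g. ship, roll back, iterate
    learnings: str = ""


def to_json_line(record: ExperimentRecord) -> str:
    """Serialize a record as one JSON line, suitable for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)


def from_json_line(line: str) -> ExperimentRecord:
    """Rebuild a record from a JSON line written by to_json_line."""
    return ExperimentRecord(**json.loads(line))


if __name__ == "__main__":
    record = ExperimentRecord(
        experiment_id="cart-reminder-001",          # hypothetical identifiers
        hypothesis="Push reminders lift cart completion versus email",
        kpi="CVR",
        prompt_version="reminder-prompt-v3",
        model_id="copy-model-a",
        data_sources=["cart_events"],
        variants=["email", "push"],
    )
    print(to_json_line(record))
```

Keeping the prompt version and model ID next to the decision means a later team can tell exactly which configuration a result applies to.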

Plan rollbacks and recovery

Document rollback steps, thresholds for pausing, and how to communicate changes. Fast recovery matters as much as fast wins.

Connect experiments to orchestration

Feed winning logic back into journeys across email, push, SMS, in-app, and web. Over time, you’ll progress from manual tests to adaptive agentic workflow decisioning.

Final thoughts on AI experimentation

AI experimentation platforms turn AI from a high-potential idea into a disciplined practice. By testing prompts, models, offers, and policies in real time—and tying every decision to KPIs like conversion, retention, and lifetime value—marketers can learn faster, reduce risk, and create more relevant experiences for customers.

The key is consistency. Experiments that are small, frequent, and well-documented build a growing base of insight. Over time, that turns into a competitive advantage, giving you the ability to adapt quickly to changing behavior, scale personalization responsibly, and connect every customer interaction to measurable business outcomes. Braze enables cross-channel orchestration, with essential guardrails baked into both autonomous and multi-agent workflows, empowering you to experiment with confidence.

AI experimentation FAQs

What is an AI experimentation platform?

An AI experimentation platform is a system that lets marketers safely test and optimize prompts, models, offers, and customer journeys in real time, with built-in guardrails and measurement.

How is AI experimentation different from traditional A/B testing?

Traditional A/B testing applies the same variation across large groups. AI experimentation adapts in real time, often at the individual level, using methods like reinforcement learning and multi-armed bandits.

What can marketers test with AI?

Marketers can experiment with prompts and copy, model selection, offer logic, channel mix, timing, and even guardrails like frequency caps or tone filters.

What KPIs matter for AI experimentation?

The most common KPIs are CTR, CVR, retention, and CLV. These connect experiments directly to business impact.

How do reinforcement learning and bandits fit into AI experimentation?

Reinforcement learning and bandits allow experimentation to go further than static tests. Bandits allocate more traffic to winning variants during a test, while reinforcement learning adapts decisions continuously for each customer.

How does AI experimentation support customer engagement?

AI experimentation supports customer engagement by tying every test to live behavior, so marketers can deliver more relevant, timely, and personalized interactions.

What features should an AI experimentation platform include?

Key features of an AI experimentation platform include cross-channel orchestration, continuous learning systems like reinforcement learning, built-in guardrails, and clear measurement tied to business KPIs.

What are the risks and guardrails in AI experimentation?

Risks in AI experimentation include over-messaging, off-brand outputs, or biased results. Guardrails such as frequency caps, approval workflows, and fairness checks reduce these risks and keep experiments safe.

When should reinforcement learning experimentation be used vs. A/B testing?

A/B testing works well for simple, controlled comparisons. Reinforcement learning is best when experiments need to adapt in real time at the individual level, such as testing offers, channels, or timing.

Be Absolutely Engaging.™

Sign up for regular updates from Braze.

Related Content

Article13 min read

Article13 min readBraze vs Salesforce: Which customer engagement platform is right for your business?

February 19, 2026 Article18 min read

Article18 min readBraze vs Adobe: Which customer engagement platform is right for your brand?

February 19, 2026 Article7 min read

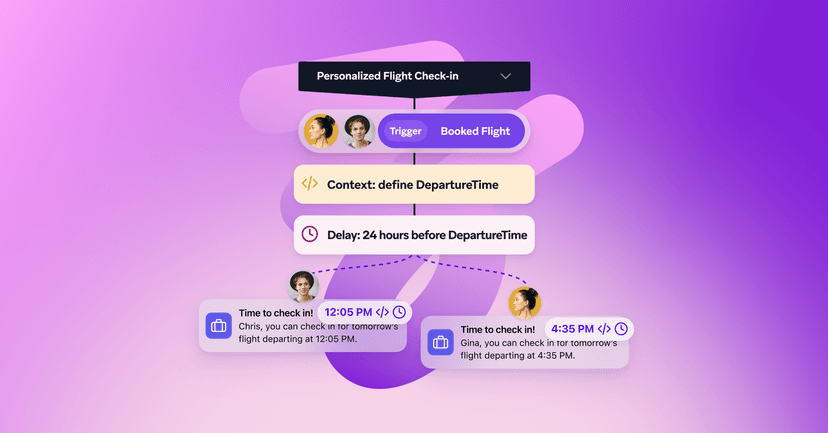

Article7 min readEvery journey needs the right (Canvas) Context

February 19, 2026