When A/B Testing Isn’t Worth It

Published on December 13, 2015/Last edited on December 13, 2015/8 min read

Team Braze

For marketing geeks like you and me, nothing gets the blood flowing like an A/B test. They’re quick to run and it’s deeply satisfying to watch the results stream in. Once we’ve begun, we’re off to the races, and it’s hard to imagine how we ever did without. If only we could do this with everything: play two reels of our big life decisions at once to see which choices were the right ones.

But without careful consideration, A/B testing can become a waste of valuable time. Here’s how to get the most out of it.

What is A/B testing? How does it work?

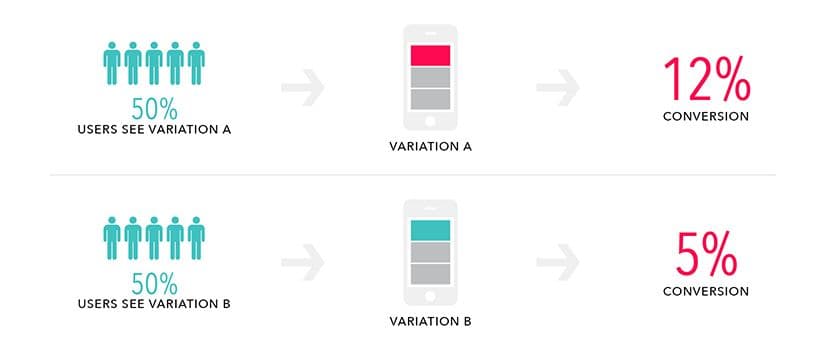

A/B testing allows you to test an experience or message to see if it can be improved upon. In an A/B test, you present users with two versions of a site, app, or feature (version A vs. B). The version that performs best, by whatever metric you’re tracking, wins.

You can test just about anything: buttons, fonts, calls to action, editorial content styles, and even next-level details like scroll speed. You place one version, usually the control (A), in front of one set of users, and a variant (B) in front of a second set. Traffic is randomized as much as possible so that the only variable you’re testing is the one changed in variant B. Testing multiple variables and/or multiple variants at once is known as multivariate testing, a topic for another day.
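One common way to randomize traffic is to hash each user’s ID into a bucket. The sketch below is only an illustration of that idea, not a description of how any particular testing tool works; the function name `assign_variant` and the experiment label are hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically map a user to a variant for one experiment."""
    # Include the experiment name so the same user can land in
    # different buckets across different experiments.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Treat the hash as a large integer and take it modulo the variant count.
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


if __name__ == "__main__":
    for uid in ("user-1", "user-2", "user-3"):
        print(uid, assign_variant(uid, "checkout-button"))
```

Because the assignment depends only on the user ID and the experiment name, a returning user sees the same version on every visit, and the split across many users comes out close to even.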

Use A/B testing to test a hypothesis

Use A/B testing to test subjective ideas for how to resolve an issue with objective, data-based evidence that will confirm whether the ideas are sound.

Done well, A/B testing follows a basic recipe. Begin with a problem you’d like to solve. Maybe you have data or user research suggesting that there’s an issue, or just an informed hunch derived from knowledge of your product and audience.

Next, develop a hypothesis identifying what appears to be the best solution to your problem. Then, run your test to gather empirical evidence that will ultimately support or refute your hypothesis. Finally, take action based on what you’ve learned.

What to look out for before you embark on an A/B test

In De Tocqueville’s 1835 study of American character (Democracy in America), he wrote that in the U.S., “public opinion is divided into a thousand minute shades of difference upon questions of very little moment.”

De Tocqueville, of course, could have had no idea how relevant his remarks might become in the context of digital and mobile marketing. Some results simply don’t warrant the time they take to unearth. Know when it’s time to A/B test, and when your time may be better spent elsewhere.

4 reasons not to run a test

1. Don’t A/B test when: you don’t yet have meaningful traffic

A/B testing has become so ubiquitous, it’s hard to imagine the world of mobile or product development without it. Yet, jumping into the deep end of the testing pool before you’ve even gotten your ankles wet could be a mistake.

Statistical significance is an important concept in testing. A result is statistically significant when it is unlikely to have arisen by chance alone. By testing a large enough group of users, you get a better estimate of what the average user prefers and make it less likely that the preference you identify is actually the result of sampling error.

Did you see movement because users actually prefer the variant to the control? Or, for example, did you unknowingly serve one version to people who love cats and the other to people who hate cheeseburgers, meaning your results don’t actually tell you anything about your average user? To protect against this kind of sampling error, you need a sample large enough to reach statistical significance. How do you figure out whether your results are significant enough to warrant action? Math!

You can begin by using this free A/B significance calculator (or this one, if you prefer). Each calculator compares visitors and conversions for both versions in your test, does a bunch of back-end math, and gives you a “confidence level” expressed as a percentage, letting you know whether your test has yielded results that you can confidently act upon.
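For the curious, the math behind calculators like these is often a two-proportion z-test. The sketch below assumes that approach and a two-sided test; any given calculator may use a different method (a one-sided test or a Bayesian model, for instance), so its numbers may not match. The function name `ab_confidence` is hypothetical.

```python
from math import erf, sqrt


def ab_confidence(visitors_a, conversions_a, visitors_b, conversions_b):
    """Return a confidence level (0 to 1) that A and B truly differ.

    Uses a two-sided, two-proportion z-test with a pooled standard error.
    """
    rate_a = conversions_a / visitors_a
    rate_b = conversions_b / visitors_b
    # Pooled conversion rate under the assumption that A and B are the same.
    pooled = (conversions_a + conversions_b) / (visitors_a + visitors_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    if std_err == 0:
        return 0.0  # no variation at all, so nothing to compare
    z = (rate_b - rate_a) / std_err
    # Standard normal CDF written with the error function.
    normal_cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    p_value = 2 * (1 - normal_cdf)
    return 1 - p_value


if __name__ == "__main__":
    # Same conversion rates (10% vs. 15%), different traffic levels.
    print(f"{ab_confidence(100, 10, 100, 15):.1%}")
    print(f"{ab_confidence(1000, 100, 1000, 150):.1%}")
```

With the same 10% vs. 15% conversion rates, 100 visitors per side falls short of 95% confidence under these assumptions, while 1,000 visitors per side clears 99%.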

Testing something you expect to make a huge difference in conversion rate is usually doable with lower traffic, but to test small changes, like the color of a button, you’ll need a larger sample size. If you’re concerned, play around with this calculator to see if your traffic is where it should be before you run an A/B test.
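To see why small changes need more traffic, here is a rough sketch of a standard sample-size estimate for comparing two conversion rates. It assumes a two-sided test at 95% confidence with 80% power, which are conventional defaults rather than values taken from any particular calculator; the function name and parameters are hypothetical.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_lift,
                            confidence=0.95, power=0.80):
    """Estimate users needed in EACH variant to detect a given relative lift."""
    if relative_lift == 0:
        raise ValueError("relative_lift must be non-zero")
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    # z-scores for the chosen confidence (two-sided) and power.
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


if __name__ == "__main__":
    # A big expected change vs. a small one, both from a 10% baseline.
    print(sample_size_per_variant(0.10, 0.20))
    print(sample_size_per_variant(0.10, 0.02))
```

Under these assumptions, detecting a 20% relative lift from a 10% baseline takes a few thousand users per variant, while detecting a 2% lift takes hundreds of thousands.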

If you don’t have enough users to inform meaningful results, your efforts might be better spent attracting more customers instead of experimenting. If you do decide to go ahead and run a test while your user base is still small, you may need to leave your test live for many weeks before you’ll see meaningful results.

2. Don’t A/B test if: you can’t safely spend the time

Andrew Cohen, Founder & CEO of Brainscape, and instructor at TechStars and General Assembly, says, “Performing split tests is simply a management-intensive task, no matter how cheap and efficient A/B testing plugins … have become. Someone needs to devote their time to determining what to test, setting up the test and verifying and implementing the results of the test.”

Although these tasks can be executed with relative ease, Cohen explains, they still require plenty of “mental bandwidth, which is the scarcest resource at any company (especially an early-stage startup).”

Spend time up front deciding what you should test, so you are making the best use of your A/B testing time.

3. Don’t A/B test if: you don’t yet have an informed hypothesis

Gather information. Identify your problem. Define a hypothesis. Then test to see if you’re right. Treat the A/B test like real science! A good scientist never begins an experiment without a hypothesis.

To define your hypothesis, know the problem you want to solve, and identify a conversion goal. For example, let’s say your customers tend to drop off at a certain point in the conversion funnel.

The problem: customers load items into their cart, but never finish the purchase process.

Based on a bit of market research and your own well-informed judgement, you believe that if you add a button that says, “finish my purchase,” you’ll be able to increase conversion. It’s also important to define your success metric. What’s the smallest increase in conversion you’d be happy to see? (And why that number? What does it mean for your business as a whole to win that increase?) This ties back into your statistical significance calculations as well. For this example, say you want to increase conversion by 20%.

A scientific hypothesis is usually written in if/then format. So your hypothesis becomes, “If I add a ‘finish my purchase’ button, then 20% more people will follow through with the purchase process.”
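A hypothesis like this is easier to check when the target is written down as a number. The sketch below reads “20% more people” as a relative lift (an assumption; it could also be read as percentage points) and compares the observed lift against that target. The function names and the sample figures are hypothetical, and exact fractions are used so that a result sitting right on the target is not lost to floating-point rounding.

```python
from fractions import Fraction


def relative_lift(control_visitors, control_conversions,
                  variant_visitors, variant_conversions):
    """Relative change in conversion rate, variant vs. control."""
    control_rate = Fraction(control_conversions, control_visitors)
    variant_rate = Fraction(variant_conversions, variant_visitors)
    # Raises ZeroDivisionError if the control had no conversions at all.
    return (variant_rate - control_rate) / control_rate


def meets_target(control_visitors, control_conversions,
                 variant_visitors, variant_conversions,
                 target_lift=Fraction(1, 5)):
    """True if the observed relative lift reaches the target (default 20%)."""
    lift = relative_lift(control_visitors, control_conversions,
                         variant_visitors, variant_conversions)
    return lift >= target_lift


if __name__ == "__main__":
    # Control converts 100 of 1,000 users; variant converts 120 of 1,000.
    print(float(relative_lift(1000, 100, 1000, 120)))
    print(meets_target(1000, 100, 1000, 120))
```

Hitting the target lift is only half of the picture: the difference still needs to be statistically significant before you act on it.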

At the end of your test, you’ll have some decisions to make. If your test is positive and confirms your hypothesis, congratulations! You win. Your hypothesis is now supported by evidence (at the confidence level you achieved, of course; no single test proves it outright). If your business is agile enough, you can institute a permanent solution right away. You may wish to keep testing smaller variations to see if there’s more room to improve upon your first success.

If your test is negative, and your hypothesis didn’t hit the mark, you also win! This means your control is the winning formula, and you can keep using it with confidence. Again, though, you may wish to test different variants if you’re not getting the results you need. See if there’s another way to solve your problem, and develop a new hypothesis.

If your test is inconclusive, revisit your problem. Are you sure the pain point is where you think it is? Do you have enough traffic to inform statistically significant results? Remember that the answer to what ails your product may not necessarily be in an A/B test.

4. Don’t A/B test if: there’s low risk to taking action right away

Lynn Wang, head of marketing at Apptimize, says, “A/B testing should be skipped in situations where you know that an idea almost certainly will improve your app and the risks associated with … implementing the idea are low.” She adds, “There is no reason to spend time and resources to test something that probably is good and has low risk. Jumping to implementation is perfectly advisable.”

This is especially useful to remember if your time is scarce. Keep in mind that a given result may be true, and at the same time, it may be unimportant.

A good tool is only as useful as its smart application

A/B testing is an incredible resource. Smart, simple actions taken based on clear results from well-applied tests have catapulted success across the digital landscape. Successful businesses know when it’s time to be patient, and run a meaningful test. They also know when to rely on their intuition or other sources of information, and move forward without the supposed safety net of a protracted or premature testing period that actually won’t add any value.

Be Absolutely Engaging.™

Sign up for regular updates from Braze.

Related Content

Article16 min read

Article16 min readChoosing the best AI decisioning platforms for 2026 (across industries)

February 12, 2026 Article3 min read

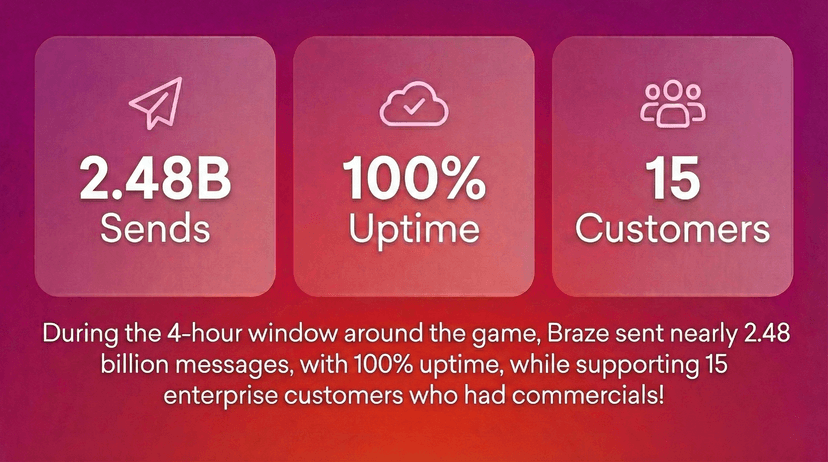

Article3 min read2.4+ billion sends, zero fumbles: How Braze supports leading brands during the big game

February 09, 2026 Article4 min read

Article4 min readBeyond Predictions: Why Your Personalization Strategy Needs an AI Decisioning Agent

February 09, 2026