A/B vs. Multivariate Testing: When to Use Each

Published on March 01, 2016/Last edited on March 01, 2016/4 min read

Team Braze

Dear reader: This blog post is vintage Appboy. We invite you to enjoy the wisdom of our former selves—and then for more information, check out our new Cross-Channel Engagement Difference Report.

These days, marketing decisions need to happen quickly. Audience attention spans are fleeting, and information overload is the new norm online. As marketers, it’s essential that we make sure that our marketing communications are effectively winning over and converting our audiences. That’s where A/B and multivariate testing enter the picture.

Testing can help you:

- Identify the best copy or visuals to encourage a desired conversion in any one campaign

- Test marketing campaign ideas before deploying them widely

- Find untapped opportunities to clarify your messaging with your audience

But first, you have to know whether to run an A/B test or a multivariate test, and how to do each. The difference between these two methodologies is often not well understood (hey, not all marketers have formal training as statisticians or data analysts). So let’s take a look.

The differences between A/B and multivariate testing

While the big-picture goals of both types of testing are similar, their specific applications are not. A/B testing provides a simple way to test two or more concepts, page designs, or features (e.g. two versions of an app landing page, in-app calls to action, or ad units for advertising campaigns). Multivariate testing allows businesses to determine which combination of variables performs best.

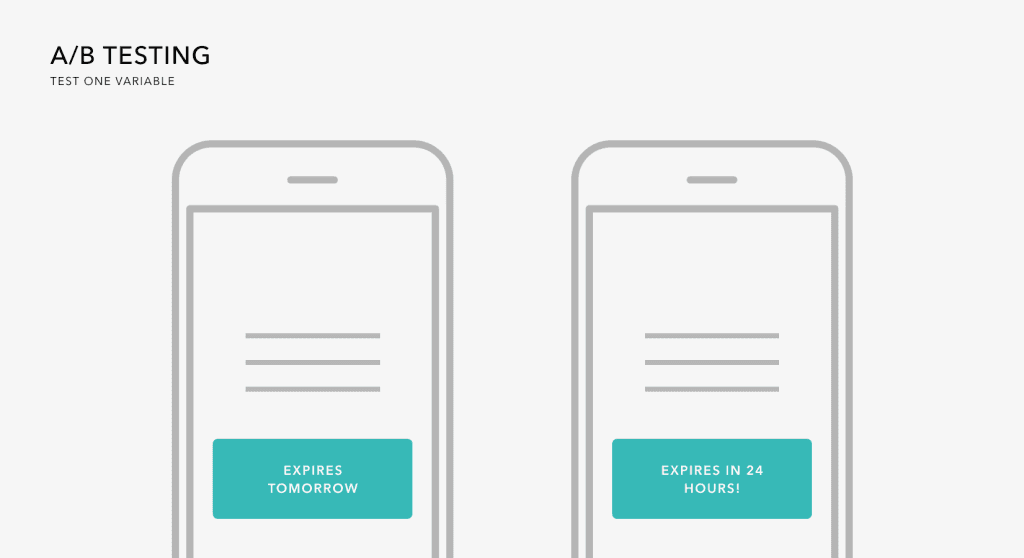

A/B Testing

Test just one variable of your campaign:

- Example: Vary your CTA message from the original (A) to a variant (B)

Or test the differing impacts of alternate campaign designs or approaches:

- Example: Send in-app messages with different designs to separate groups of users, to see which design sparks the better response

- Example: A longitudinal test in which a test group will receive your push notifications, but a control group of users will get no push messages at all
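One common way to split users into an original (A) and a variant (B) group is to hash each user ID, so that the same user always lands in the same group. The sketch below is only an illustration of that idea: the function name `assign_group`, the experiment label, and the user IDs are all hypothetical, and it does not describe how any particular marketing platform assigns users.

```python
import hashlib

def assign_group(user_id: str, experiment: str, groups=("A", "B")) -> str:
    """Deterministically map a user to one of the test groups.

    Hashing the experiment name together with the user ID keeps a user's
    assignment stable within one test while varying it across tests.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    return groups[int(digest, 16) % len(groups)]

# Hypothetical audience of 10,000 users for a CTA copy test.
users = [f"user-{i}" for i in range(10_000)]
counts = {}
for user in users:
    group = assign_group(user, "cta-copy-test")
    counts[group] = counts.get(group, 0) + 1

print(counts)  # roughly an even split between "A" and "B"
```

The same function could cover the test-versus-control example by passing `groups=("push", "control")`.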

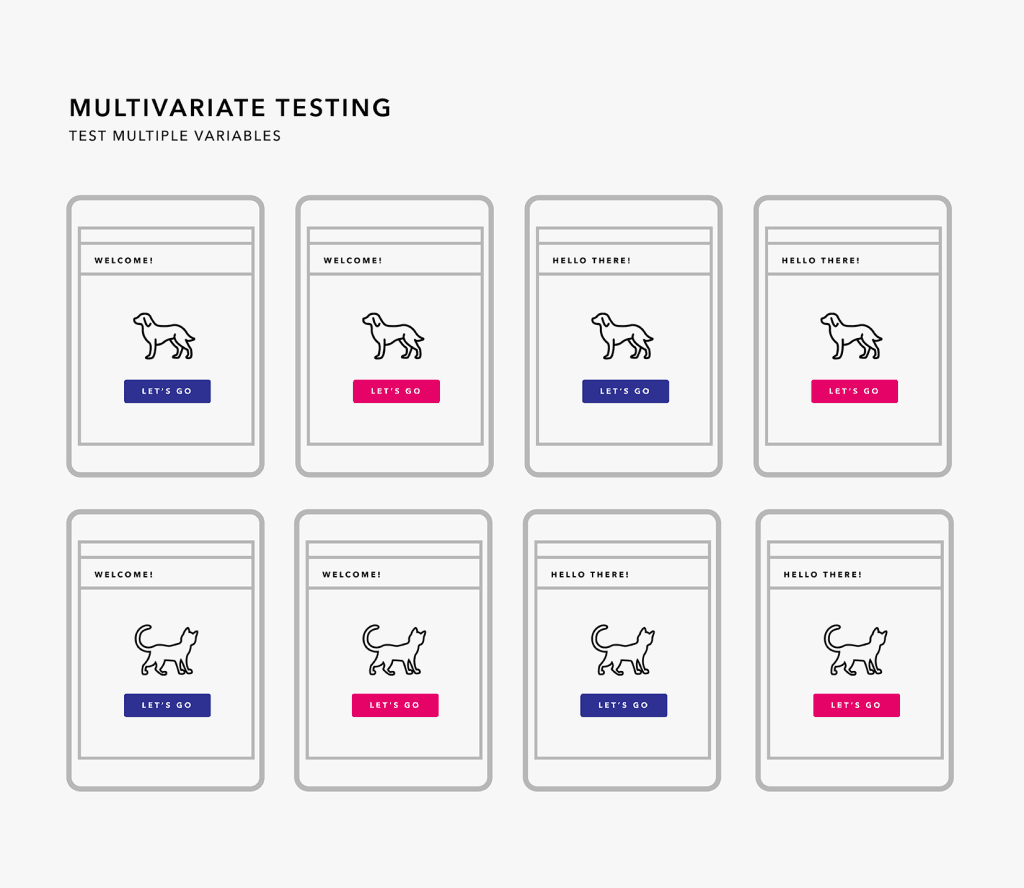

Multivariate Testing

Test combinations of variables within a single campaign or message:

- Example: Test the subject line of your email message, plus the image that accompanies your text, plus the color of the CTA button
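To see how quickly combinations add up, here is a minimal sketch that enumerates every version of the email example above. The specific subject lines, image names, and button colors are made up for illustration.

```python
from itertools import product

# Hypothetical options for each variable in the email example.
subject_lines = ["Your cart misses you", "Still thinking it over?"]
images = ["lifestyle.png", "product.png"]
cta_colors = ["green", "orange", "blue"]

# Every combination of subject line, image, and CTA color is one variant.
variants = [
    {"subject": subject, "image": image, "cta_color": color}
    for subject, image, color in product(subject_lines, images, cta_colors)
]

print(len(variants))  # 2 * 2 * 3 = 12 variants
```

Adding a fourth variable with just two options would double the count to 24, which is why multivariate tests tend to need much more traffic.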

Pros and cons

Both kinds of testing have their advantages and potential disadvantages. Take a look at factors that might influence you to choose one over the other for any specific campaign.

A/B Testing

Pros

- Relatively simple to design and execute

- Can help settle debates around campaign tactics when there is one idea in question

- Possible to generate statistically significant results with smaller traffic samples (relative to a multivariate test)

- Provides straightforward results that are easier for non-quantitative business teams to interpret and implement

Cons

- Limited to a single variable and a few variants of that variable (usually just 2 variants)

- Not possible to see the interaction between multiple variables within the same campaign

Multivariate Testing

Pros

- Provides insight into interactions between multiple variables

- Creates a granular picture of which campaign elements affect performance

- Enables marketers to compare many different versions (upwards of dozens) of a campaign

Cons

- In general, requires more traffic than A/B testing

- Can quickly become unfeasible to manage with too many combinations, even for high-traffic campaigns

- Can take relatively more time to get up and running

- Can be overkill when an A/B test would be sufficient
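The traffic trade-off can be made concrete with a quick significance check. The sketch below uses a standard two-proportion z-test, which is one common choice rather than the only valid one, and all of the send and conversion counts are invented for illustration. It compares the same underlying lift (roughly 4% versus 5%) when 10,000 recipients are split two ways versus twelve ways.

```python
from math import erfc, sqrt

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the assumption that both groups convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    p_value = erfc(abs(z) / sqrt(2))  # two-sided tail of the normal distribution
    return z, p_value

# A/B test: 10,000 recipients split into two groups of 5,000.
z_ab, p_ab = two_proportion_z_test(200, 5000, 250, 5000)

# Multivariate test: the same 10,000 recipients spread over 12 variants,
# so any two variants being compared have only about 833 recipients each.
z_cell, p_cell = two_proportion_z_test(33, 833, 42, 833)

print(f"A/B split:     z={z_ab:.2f}, p={p_ab:.3f}")
print(f"12-way split:  z={z_cell:.2f}, p={p_cell:.3f}")
```

With these made-up numbers, the two-way split clears the conventional 0.05 threshold while the comparison between two of the twelve variants does not, even though the conversion rates are nearly identical.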

Designing your experiments

Marketers can fall into the trap of wanting to just get started with testing, without defining their plans and techniques upfront. The end result? Bad data, missed directions, and inconclusive findings.

Take the time to map out your goals before you launch a marketing experiment. When you’re running a conversion optimization study, it’s easy to get stuck in a mode of perpetual exploration, so give yourself enough focus and structure at the start. Success begins with establishing the right goals.

Creating an ongoing strategy

Once you get into a basic routine running your first A/B and multivariate tests, you may want to build a testing process that’s repeatable and useful.

Here are some tips:

- Look for ways to enhance your intelligence with the software you use to execute your tests and send your campaigns. Intelligent selection, for instance, is a feature in Appboy’s mobile marketing suite that automatically adjusts to send the best-performing version of a message to the remainder of recipients as the campaign sends.

- Be prepared to take a step back from failed tests or inconclusive results. A “failed” marketing experiment isn’t necessarily useless: it’s an opportunity to learn and grow. If there’s not a “winner” in your A/B or multivariate test, what does that tell you instead? Perhaps it means you should focus on a different aspect of your campaign than the one you originally thought needed testing.
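The general idea of sending the best-performing version to the remainder of recipients can be sketched in a few lines. This is a deliberately simplified illustration and not Appboy's actual algorithm: the function names, the early results, and the "pick the highest conversion rate so far" rule are all assumptions made for the example.

```python
def pick_best_variant(results):
    """results maps variant name -> (conversions, sends so far)."""
    def rate(name):
        conversions, sends = results[name]
        return conversions / sends if sends else 0.0
    return max(results, key=rate)

def plan_remaining_sends(results, total_recipients):
    """Return the current leader and how many recipients are still unsent."""
    already_sent = sum(sends for _, sends in results.values())
    remaining = max(total_recipients - already_sent, 0)
    return pick_best_variant(results), remaining

# Hypothetical early results after the first 2,000 of 20,000 sends.
early_results = {"A": (40, 1000), "B": (55, 1000)}
winner, remaining = plan_remaining_sends(early_results, 20_000)

print(f"Send variant {winner} to the remaining {remaining} recipients")
```

A production system would also need to account for statistical noise in the early results before committing to a leader.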

Expect overall improvements in campaign performance to require a long-term, iterative process. Learn, grow, explore, and find creative ways to reach your customers.

Be Absolutely Engaging.™

Sign up for regular updates from Braze.

Related Content

Article16 min read

Article16 min readChoosing the best AI decisioning platforms for 2026 (across industries)

February 12, 2026 Article3 min read

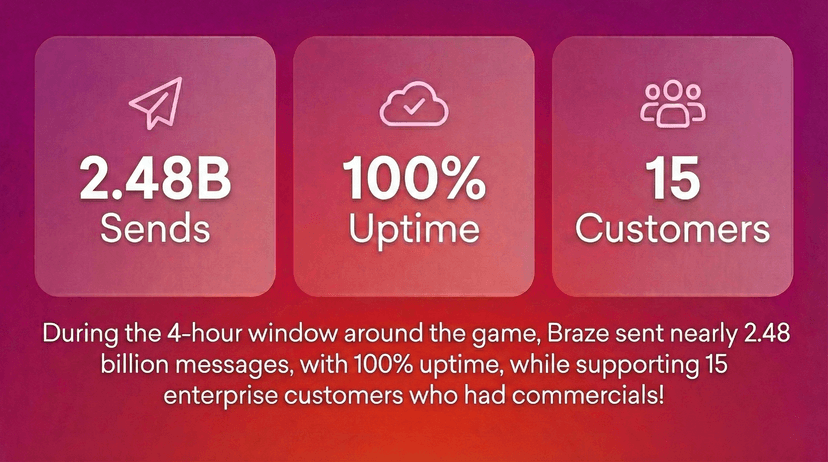

Article3 min read2.4+ billion sends, zero fumbles: How Braze supports leading brands during the big game

February 09, 2026 Article4 min read

Article4 min readBeyond Predictions: Why Your Personalization Strategy Needs an AI Decisioning Agent

February 09, 2026