Are Your Go-To Mobile Marketing KPIs Tricking You?

Published on April 12, 2016 / Last edited on April 12, 2016 / 7 min read

Team Braze

Next time you look at your marketing dashboard, take the time to play devil’s advocate: your go-to marketing KPIs may be steering you in the wrong direction. The reason?

Metrics represent a form of storytelling. Before the data points you’re measuring were numbers on a screen, they were a collection of moments, stories, or events. Important business concepts like retention, churn, stickiness, cost-per-acquisition, and lifetime value are stories that marketing analysts and data scientists build ways to tell.

Behind every data point is a set of assumptions and methodologies for calculating the metrics you’re tracking. And because there are sometimes multiple accepted ways to measure the same idea (LTV, for example), you’re likely making mathematical tradeoffs whether or not you realize it.

As a result, your favorite KPIs may be tricking you or communicating an incomplete story. It’s important to understand the secrets that your key marketing metrics may be keeping. Here’s how:

Dig into the operationalization

The process of getting from a business goal (e.g., increase customer engagement) to a tangible, trackable metric (e.g., number of returning users in a month, or number of sessions per returning user, or number of conversions per returning user, or…) is called operationalization. The first step is to pin down the idea that you want to measure. Then, brainstorm ways to quantify it, generating a list of options. Once you have that list, you can assess the tradeoffs of each option: how technically feasible it is to measure, and whether the resulting number is the best representation of what you’re trying to capture. After this process, which may require some testing and iteration along the way, you’ll arrive at the metric or metrics you want to track.
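To make this concrete, here’s a minimal Python sketch that operationalizes “customer engagement” three different ways from the same data. The event log, the `engagement_metrics` function, and the definition of a “returning user” (active this month and seen in any earlier month) are hypothetical assumptions for illustration, not a standard definition.

```python
from collections import defaultdict

# Hypothetical event log: (user_id, month_index, event_type).
# event_type is either "session" or "conversion".
EVENTS = [
    ("u1", 0, "session"), ("u1", 1, "session"), ("u1", 1, "session"), ("u1", 1, "conversion"),
    ("u2", 0, "session"), ("u2", 1, "session"),
    ("u3", 1, "session"), ("u3", 1, "conversion"),
    ("u4", 0, "session"),
]


def engagement_metrics(events, month):
    """Operationalize 'engagement' for one month in three different ways."""
    sessions = defaultdict(int)     # sessions per user in the target month
    conversions = defaultdict(int)  # conversions per user in the target month
    seen_before = set()             # users with a session in any earlier month
    for user, m, kind in events:
        if m < month and kind == "session":
            seen_before.add(user)
        elif m == month and kind == "session":
            sessions[user] += 1
        elif m == month and kind == "conversion":
            conversions[user] += 1

    # Assumed definition: a returning user is active this month AND was seen earlier.
    returning = {u for u in sessions if u in seen_before}
    n = len(returning)
    return {
        "returning_users": n,
        "sessions_per_returning_user": sum(sessions[u] for u in returning) / n if n else 0.0,
        "conversions_per_returning_user": sum(conversions[u] for u in returning) / n if n else 0.0,
    }


print(engagement_metrics(EVENTS, month=1))
```

With this toy data, month 1 has two returning users who average 1.5 sessions but only 0.5 conversions each. Each number is a defensible reading of “engagement,” and they can move independently of one another, which is why the choice of operationalization matters.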

For a non-marketing example of operationalization that you’re likely familiar with, take a look at the U.S. News & World Report education rankings, which aim to measure “academic excellence.” Digging into the methodology, you’ll see that the publication looks at several different dimensions to compile its overall scores, including alumni donation rates, academic peer ratings, and more. U.S. News collects this information through self-reported surveys.
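Composite scores like these hinge on how each dimension is scaled and weighted. The sketch below is purely illustrative: the schools, their scores, the weights, and the use of min-max normalization are all assumptions, not U.S. News’s actual methodology. It shows how the same underlying data can produce opposite rankings under different weightings.

```python
# Hypothetical raw scores for three schools (made-up numbers, not real data).
SCHOOLS = {
    "School A": {"alumni_donation_rate": 0.30, "peer_rating": 3.0},
    "School B": {"alumni_donation_rate": 0.10, "peer_rating": 4.5},
    "School C": {"alumni_donation_rate": 0.20, "peer_rating": 4.0},
}


def composite_ranking(schools, weights):
    """Rank schools by a weighted sum of min-max normalized dimensions (0 = lowest, 1 = highest)."""
    composite = {name: 0.0 for name in schools}
    for dim, weight in weights.items():
        values = [scores[dim] for scores in schools.values()]
        low, high = min(values), max(values)
        for name, scores in schools.items():
            # If every school ties on a dimension, it contributes nothing to the ranking.
            normalized = (scores[dim] - low) / (high - low) if high > low else 0.0
            composite[name] += weight * normalized
    return sorted(composite, key=composite.get, reverse=True)


print(composite_ranking(SCHOOLS, {"alumni_donation_rate": 0.7, "peer_rating": 0.3}))
print(composite_ranking(SCHOOLS, {"alumni_donation_rate": 0.3, "peer_rating": 0.7}))
```

With donation-heavy weights, School A ranks first; with peer-heavy weights, School B does, and School A drops to last. Nothing about the schools changed, only the operationalization.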

For a marketing example of what this process looks like, take a look at the Google Analytics help center, where Google explains how it measures website visits, time on site, return visits, and more from both technical and analytical perspectives. You can see how the Google Analytics tracking code translates abstract concepts into quantifiable events, which are then aggregated into the numbers you see when you log in to your dashboard.
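As one small illustration of how a tracking tool turns raw activity into a “visit,” here’s a minimal sessionization sketch. It assumes a single rule: a gap of more than 30 minutes between a visitor’s hits starts a new session. Thirty minutes is the default session timeout Google Analytics documents, but its real rules include other conditions this sketch ignores, and the `count_sessions` function is hypothetical.

```python
from datetime import datetime, timedelta

# Assumed rule: inactivity longer than this starts a new session.
SESSION_TIMEOUT = timedelta(minutes=30)


def count_sessions(hit_times, timeout=SESSION_TIMEOUT):
    """Count one visitor's sessions: any gap longer than `timeout` starts a new one."""
    sessions = 0
    last = None
    for t in sorted(hit_times):
        if last is None or t - last > timeout:
            sessions += 1
        last = t
    return sessions


hits = [
    datetime(2016, 4, 12, 9, 0),
    datetime(2016, 4, 12, 9, 20),  # 20-minute gap: same session
    datetime(2016, 4, 12, 10, 5),  # 45-minute gap: new session under a 30-minute timeout
]
print(count_sessions(hits))                         # 2
print(count_sessions(hits, timedelta(minutes=60)))  # 1
```

The same three hits count as two visits or one, depending on a timeout you never see on the dashboard.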

In their busy day-to-day, marketers usually see only this end metric, not what’s happening behind the scenes. But it’s important to know exactly what you’re quantifying so that you don’t take a wrong turn based on incorrect assumptions or conclusions.

Know the ways in which data can be misunderstood

You’ve probably learned that when you’re working with a dataset, it’s important to sanity-check your numbers. But you’re not done yet: you also need to examine how your numbers came to be. For example, your experiment design might have flaws.

Additionally, even assuming your data is showing you what you think it is, you might still interpret that data incorrectly. Here are some common culprits to have on your radar:

Bias: This statistical concept reflects a fundamental idea of sampling: the groups you’re analyzing should be representative of your overall population. In a marketing context, bias can creep in for a variety of reasons. For instance, the people in your sample might share a trait that you aren’t tracking or accounting for in your current analysis. Here’s an example: you could end up generalizing purchase behavior to all your customers, even though wealthier individuals make up a larger share of your sample than of your customer base as a whole.
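One common way to correct for a skewed sample like this is post-stratification: average within each segment, then weight each segment by its share of your actual customer base. The sketch below assumes you know those population shares; the segments, spend figures, and shares are made up for illustration.

```python
# Hypothetical sample: (segment, monthly_spend). Half the sample is high-income.
SAMPLE = [
    ("high_income", 120.0), ("high_income", 100.0),
    ("high_income", 110.0), ("high_income", 130.0),
    ("other", 40.0), ("other", 30.0), ("other", 50.0), ("other", 40.0),
]

# Assumed share of each segment in the full customer base (high-income is only 20%).
POPULATION_SHARE = {"high_income": 0.2, "other": 0.8}


def naive_mean(sample):
    """Plain average over the sample, which over-represents high-income customers."""
    return sum(spend for _, spend in sample) / len(sample)


def reweighted_mean(sample, population_share):
    """Post-stratified mean: average each segment, then weight by its population share.

    Assumes every segment in population_share appears in the sample at least once.
    """
    totals, counts = {}, {}
    for segment, spend in sample:
        totals[segment] = totals.get(segment, 0.0) + spend
        counts[segment] = counts.get(segment, 0) + 1
    return sum(share * totals[seg] / counts[seg] for seg, share in population_share.items())


print(naive_mean(SAMPLE))                                   # 77.5
print(round(reweighted_mean(SAMPLE, POPULATION_SHARE), 2))  # 55.0
```

The naive average overstates typical spend by more than 40% simply because of who ended up in the sample.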

Confounders: You may be fixated on the relationship between two variables, not realizing that a hidden third variable is driving the correlation. For instance, you may notice that your sales spike on summer holidays and conclude that holidays are top days for purchases, when in reality the hot weather on those days is what’s driving sales.
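A simple guard against this kind of confounder is to stratify: compare holidays with non-holidays only among days with the same weather. The daily records below are hypothetical and deliberately constructed so that weather alone drives sales; `holiday_lift` and `holiday_lift_by_weather` are illustrative helpers, not a library API.

```python
from statistics import mean

# Hypothetical daily records: (is_holiday, is_hot, sales).
DAYS = [
    (True, True, 200), (True, True, 200), (True, True, 200), (True, False, 100),
    (False, True, 200), (False, False, 100), (False, False, 100), (False, False, 100),
]


def holiday_lift(days):
    """Naive comparison: average holiday sales minus average non-holiday sales.

    Assumes `days` contains at least one holiday and one non-holiday.
    """
    holiday_sales = [sales for is_holiday, _, sales in days if is_holiday]
    other_sales = [sales for is_holiday, _, sales in days if not is_holiday]
    return mean(holiday_sales) - mean(other_sales)


def holiday_lift_by_weather(days):
    """Same comparison, made separately within hot days and within mild days."""
    return {
        "hot": holiday_lift([d for d in days if d[1]]),
        "mild": holiday_lift([d for d in days if not d[1]]),
    }


print(holiday_lift(DAYS))
print(holiday_lift_by_weather(DAYS))
```

The naive comparison shows a 50-unit holiday lift, but within each weather stratum the lift is zero: holidays just happen to be hot more often.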

Logical fallacies: You most likely learned about these in elementary or junior high school, and they’re back to haunt you in your marketing analytics career. Here are a few common ones that could rear their heads in your data analysis:

- Ecological fallacy: Drawing conclusions about an individual based on data about the group they belong to.

- Black or white fallacy: Assuming that two states are the only possibilities when in fact there are more options.

- Perceived cause: Assuming that one thing causes another when there is in fact no causal relationship. This fallacy is related to the expression “correlation is not causation,” which you may remember from statistics or science classes.
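Here’s a minimal sketch of the ecological fallacy using made-up spend data for two hypothetical customer segments. Segment A has the higher average, but that group-level fact says little about the individual customers in it.

```python
from statistics import mean, median

# Hypothetical monthly spend per customer in two segments.
SEGMENT_A = [5, 5, 5, 5, 500]  # one very large spender pulls the average up
SEGMENT_B = [40, 40, 40, 40, 40]

print(mean(SEGMENT_A), mean(SEGMENT_B))      # group level: A looks far more valuable
print(median(SEGMENT_A), median(SEGMENT_B))  # typical individual: A spends much less

# Share of individual A customers who outspend the average B customer.
share_outspending = sum(1 for spend in SEGMENT_A if spend > mean(SEGMENT_B)) / len(SEGMENT_A)
print(share_outspending)
```

Segment A averages 104 versus 40 for Segment B, yet its median customer spends 5, and only one in five A customers outspends B’s average. Concluding that “an A customer is worth more than a B customer” would be an ecological fallacy.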

Walk the talk

Playing devil’s advocate is often easier said than done: you may find yourself disagreeing with the C-suite, spending more time analyzing your dataset, and agonizing over untold stories that you worry are slipping through the cracks. You may be under pressure to pull numbers for a quarterly report or PR campaign, or you may be anxious to make a campaign judgment call based on what you’re seeing in your analytics dashboard.

Nevertheless, it’s important to stand your ground and make sure your forecasts account for the intricacies of your dataset. Otherwise, your forecasts, projections, and even your measurement of results may be off base.

To get you started, here are a few metrics that are often interpreted incorrectly.

| Metric | Common interpretation | Possible hidden story | What to do about it |
|---|---|---|---|
| High retention rate | High retention rates suggest that your product is making your customers happy, so you might think that you’re in a good position. | Your most valuable customers are churning, while your lowest-value customers are sticking around, at least for now. | Compare the statistics of your retained sample with those of your churned sample. Then plan one or more campaigns aimed at keeping your valuable customers around. |
| High churn rate | High churn rates may lead you to believe that there’s something wrong with your product. | You may be attracting the wrong customer base (i.e., your product/market fit is off), or you may be losing users to a new competitor from whom you need to differentiate. | Analyze how your churn rates vary across your customer segments. Determine whether there are any clear patterns, such as by attribution channel or demographics. |
| Increasing Daily Active Users (DAU) or Monthly Active Users (MAU) | Your users are opening your app, so they must be engaged. | They’re opening your app, but they’re not completing any valuable conversions while they’re in it. | Explore what your users do after they open your app. You may decide to track new metrics that capture varying levels of “active” (e.g., people who spend a certain number of minutes in the app, people who engage with a particular feature, etc.). |
| Increased stickiness after a feature launch or update | The new feature or update caused the increase in stickiness because it improved the product. | A successful messaging campaign, ad spend, or another cause could be contributing to the increase in stickiness. | Only attribute causation when you can actually isolate the variables involved. Otherwise, you might just be looking at a coincidence or a correlation. |
| Increased uninstalls after a campaign | The campaign went out just before the uninstalls, so the campaign caused the jump in uninstalls, and something about it damaged your customer relationships. | Uninstalls are not necessarily reported as they occur. Both Apple and Google use methods that may introduce a delay between an uninstall and when you learn about it. An uninstall reported on March 30 could have happened at any time before March 30, including well before a campaign sent on March 29. | You can certainly watch for patterns or jumps in your uninstalls, but don’t fall into the fallacy of assuming that a given increase in uninstalls means a particular campaign was the culprit. |
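For the high-retention and high-churn cases, the first step is usually the same: break the rate down by segment. Here’s a minimal sketch using a made-up customer list; the value tiers, acquisition channels, and the `retention_by` helper are hypothetical.

```python
from collections import defaultdict

# Hypothetical customers: (value_tier, acquisition_channel, retained).
TIER, CHANNEL, RETAINED = 0, 1, 2
CUSTOMERS = (
    [("high", "organic", True)]
    + [("high", "paid_social", False)] * 3
    + [("low", "organic", True)] * 10
    + [("low", "paid_social", True)] * 5
    + [("low", "paid_social", False)]
)


def retention_rate(rows):
    """Fraction of customers in `rows` who were retained."""
    return sum(1 for r in rows if r[RETAINED]) / len(rows)


def retention_by(rows, field):
    """Retention rate for each distinct value of one field (e.g., TIER or CHANNEL)."""
    groups = defaultdict(list)
    for r in rows:
        groups[r[field]].append(r)
    return {key: retention_rate(group) for key, group in groups.items()}


print(retention_rate(CUSTOMERS))           # 0.8
print(retention_by(CUSTOMERS, TIER))       # {'high': 0.25, 'low': 0.9375}
print(retention_by(CUSTOMERS, CHANNEL))
```

An 80% overall retention rate looks healthy, but in this toy data three out of four high-value customers have left, and churn concentrates in the paid social channel.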

Before you go

As you practice interpreting and working with data, you’ll start to get a sense of how your metrics might be tricking you. If you make a mistake, learn from it. And remember to keep your team in the loop: as company priorities change, the KPIs that matter most will very likely change as well.

Be Absolutely Engaging.™

Sign up for regular updates from Braze.

Related Content

Article13 min read

Article13 min readDemographic segmentation: How to do It, examples and best practices

January 30, 2026 Article6 min read

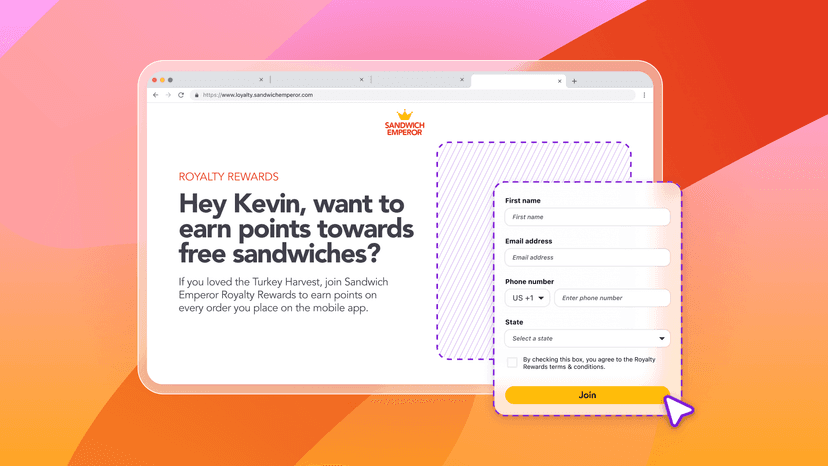

Article6 min readLanding pages made simple: How to build your first Braze Landing Page

January 30, 2026 Article9 min read

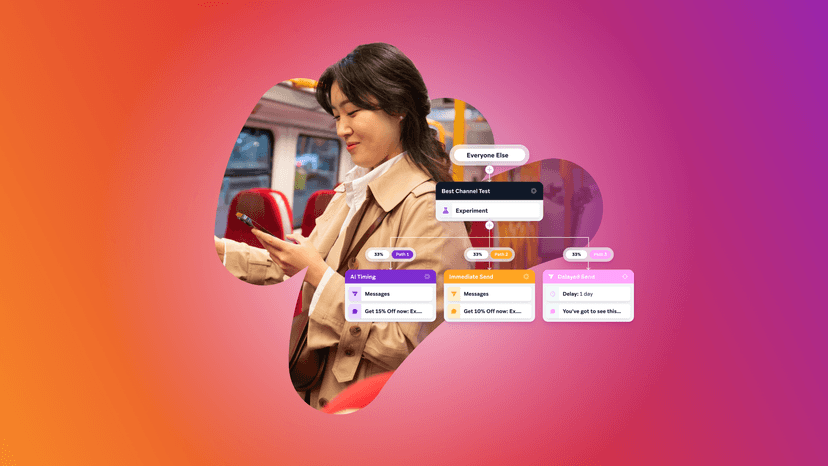

Article9 min readAI decision making: How brands use intelligent automation to scale personalization and drive revenue

January 30, 2026